Ace Your Professional Cloud Developer Exam with Practice Questions.

Google Cloud Certified – Professional Cloud Developer Practice Exam (Q 255)

Question 001

You want to upload files from an on-premises virtual machine to Google Cloud Storage as part of a data migration.

These files will be consumed by a Cloud Dataproc Hadoop cluster in a GCP environment.

Which command should you use?

- A. gsutil cp [LOCAL_OBJECT] gs://[DESTINATION_BUCKET_NAME]/

- B. gcloud cp [LOCAL_OBJECT] gs://[DESTINATION_BUCKET_NAME]/

- C. hadoop fs cp [LOCAL_OBJECT] gs://[DESTINATION_BUCKET_NAME]/

- D. gcloud dataproc cp [LOCAL_OBJECT] gs://[DESTINATION_BUCKET_NAME]/

Answer: A

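The gsutil cp command allows you to copy data between your local file system and Cloud Storage. When run outside Google Cloud, gsutil can authenticate with the credentials stored in the .boto file generated by running “gsutil config”.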

Question 002

You migrated your applications to Google Cloud Platform and kept your existing monitoring platform.

You now find that your notification system is too slow for time critical problems.

What should you do?

- A. Replace your entire monitoring platform with Stackdriver.

- B. Install the Stackdriver agents on your Compute Engine instances.

- C. Use Stackdriver to capture and alert on logs, then ship them to your existing platform.

- D. Migrate some traffic back to your old platform and perform AB testing on the two platforms concurrently.

Answer: A

Reference:

– Cloud Monitoring

Question 003

You are planning to migrate a MySQL database to the managed Cloud SQL database for Google Cloud.

You have Compute Engine virtual machine instances that will connect with this Cloud SQL instance. You do not want to whitelist IPs for the Compute Engine instances to be able to access Cloud SQL.

What should you do?

- A. Enable private IP for the Cloud SQL instance.

- B. Whitelist a project to access Cloud SQL, and add Compute Engine instances in the whitelisted project.

- C. Create a role in Cloud SQL that allows access to the database from external instances, and assign the Compute Engine instances to that role.

- D. Create a Cloud SQL instance on one project. Create Compute engine instances in a different project. Create a VPN between these two projects to allow internal access to Cloud SQL.

Answer: A

Reference:

– About Cloud SQL connections | Cloud SQL for MySQL | Google Cloud

Question 004

You have deployed an HTTP(s) Load Balancer with the gcloud commands shown below.

export NAME=load-balancer

# create network

gcloud compute networks create ${NAME}

# add instance

gcloud compute instances create ${NAME}-backend-instance-1 --subnet ${NAME} --no-address

# create the instance group

gcloud compute instance-groups unmanaged create ${NAME}-i

gcloud compute instance-groups unmanaged set-named-ports ${NAME}-i --named-ports http:80

gcloud compute instance-groups unmanaged add-instances ${NAME}-i --instances ${NAME}-backend-instance-1

# configure health checks

gcloud compute health-checks create http ${NAME}-http-hc --port 80

# create backend service

gcloud compute backend-services create ${NAME}-http-bes --health-checks ${NAME}-http-hc --protocol HTTP --port-name http --global

gcloud compute backend-services add-backend ${NAME}-http-bes --instance-group ${NAME}-i --balancing-mode RATE --max-rate 100000 --capacity-scaler 1.0 --global --instance-group-zone us-east1-d

# create url maps and forwarding rule

gcloud compute url-maps create ${NAME}-http-urlmap --default-service ${NAME}-http-bes

gcloud compute target-http-proxies create ${NAME}-http-proxy --url-map ${NAME}-http-urlmap

gcloud compute forwarding-rules create ${NAME}-http-fw --global --ip-protocol TCP --target-http-proxy ${NAME}-http-proxy --ports 80

Health checks to port 80 on the Compute Engine virtual machine instance are failing, and no traffic is sent to your instances. You want to resolve the problem.

Which commands should you run?

- A. gcloud compute instances add-access-config ${NAME}-backend-instance-1

- B. gcloud compute instances add-tags ${NAME}-backend-instance-1 --tags http-server

- C. gcloud compute firewall-rules create allow-lb --network load-balancer --allow tcp --source-ranges 130.211.0.0/22,35.191.0.0/16 --direction INGRESS

- D. gcloud compute firewall-rules create allow-lb --network load-balancer --allow tcp --destination-ranges 130.211.0.0/22,35.191.0.0/16 --direction EGRESS

Answer: C

Reference:

– Configure VMs for networking use cases | VPC | Google Cloud
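Option C works because Google Cloud load balancer health-check probes originate from 130.211.0.0/22 and 35.191.0.0/16, and a newly created custom network such as `load-balancer` has no ingress rule that admits them. The sketch below uses Python's standard `ipaddress` module to check whether a probe's source address falls inside those ranges; the function name `is_health_check_source` and the sample addresses are hypothetical, and the ranges are the ones listed in option C.

```python
import ipaddress

# Health-check source ranges, as listed in option C.
HEALTH_CHECK_RANGES = [
    ipaddress.ip_network("130.211.0.0/22"),
    ipaddress.ip_network("35.191.0.0/16"),
]


def is_health_check_source(addr: str) -> bool:
    """Return True if addr lies inside one of the health-check source ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in HEALTH_CHECK_RANGES)


if __name__ == "__main__":
    # A firewall rule must admit ingress from addresses like the first two.
    for probe in ["130.211.1.5", "35.191.200.7", "10.1.1.2"]:
        print(probe, is_health_check_source(probe))
```

An INGRESS rule is needed (not EGRESS) because the probes are inbound connections to the instance.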

Question 005

Your website is deployed on Compute Engine.

Your marketing team wants to test conversion rates between 3 different website designs.

Which approach should you use?

- A. Deploy the website on App Engine and use traffic splitting.

- B. Deploy the website on App Engine as three separate services.

- C. Deploy the website on Cloud Functions and use traffic splitting.

- D. Deploy the website on Cloud Functions as three separate functions.

Answer: A

Reference:

– Splitting Traffic | App Engine standard environment for Python 2 | Google Cloud

Question 006

You need to copy the directory local-scripts and all of its contents from your local workstation to a Compute Engine virtual machine instance.

Which command should you use?

- A. gsutil cp --project my-gcp-project -r ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone us-east1-b

- B. gsutil cp --project my-gcp-project -R ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone us-east1-b

- C. gcloud compute scp --project my-gcp-project --recurse ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone us-east1-b

- D. gcloud compute mv --project my-gcp-project --recurse ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone us-east1-b

Answer: C

Reference:

– gcloud compute copy-files | Google Cloud CLI Documentation

Question 007

You are deploying your application to a Compute Engine virtual machine instance with the Stackdriver Monitoring Agent installed.

Your application is a UNIX process on the instance. You want to be alerted if the UNIX process has not run for at least 5 minutes. You are not able to change the application to generate metrics or logs.

Which alert condition should you configure?

- A. Uptime check

- B. Process health

- C. Metric absence

- D. Metric threshold

Answer: B

Reference:

– Behavior of metric-based alerting policies | Cloud Monitoring

Question 008

You have two tables in an ANSI-SQL compliant database with identical columns that you need to quickly combine into a single table, removing duplicate rows from the result set.

What should you do?

- A. Use the JOIN operator in SQL to combine the tables.

- B. Use nested WITH statements to combine the tables.

- C. Use the UNION operator in SQL to combine the tables.

- D. Use the UNION ALL operator in SQL to combine the tables.

Answer: C

Reference:

– SQL: UNION ALL Operator
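To see the difference between options C and D, here is a small sketch that uses SQLite through Python's built-in `sqlite3` module as a stand-in for any ANSI-SQL database; the table names `a` and `b` and their rows are made up for illustration.

```python
import sqlite3

# In-memory database with two tables that have identical columns.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE a (id INTEGER, name TEXT);
    CREATE TABLE b (id INTEGER, name TEXT);
    INSERT INTO a VALUES (1, 'alice'), (2, 'bob');
    INSERT INTO b VALUES (2, 'bob'), (3, 'carol');
    """
)

# UNION removes duplicate rows from the combined result set.
union_rows = conn.execute(
    "SELECT id, name FROM a UNION SELECT id, name FROM b ORDER BY id"
).fetchall()

# UNION ALL keeps every row, including duplicates.
union_all_rows = conn.execute(
    "SELECT id, name FROM a UNION ALL SELECT id, name FROM b ORDER BY id"
).fetchall()

print(union_rows)      # [(1, 'alice'), (2, 'bob'), (3, 'carol')]
print(union_all_rows)  # [(1, 'alice'), (2, 'bob'), (2, 'bob'), (3, 'carol')]
```

The duplicate `(2, 'bob')` row appears once with UNION and twice with UNION ALL.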

Question 009

You have an application deployed in production.

When a new version is deployed, some issues don’t arise until the application receives traffic from users in production. You want to reduce both the impact and the number of users affected.

Which deployment strategy should you use?

- A. Blue/green deployment

- B. Canary deployment

- C. Rolling deployment

- D. Recreate deployment

Answer: B

Reference:

– Six Strategies for Application Deployment – The New Stack

Question 010

Your company wants to expand its user base outside the United States for its popular application.

The company wants to ensure 99.999% availability of the database for their application and also wants to minimize the read latency for their users across the globe.

Which two actions should they take? (Choose two.)

- A. Create a multi-regional Cloud Spanner instance with “nam-eur-asia1” configuration.

- B. Create a multi-regional Cloud Spanner instance with “nam3” configuration.

- C. Create a cluster with at least 3 Spanner nodes.

- D. Create a cluster with at least 1 Spanner node.

- E. Create a minimum of two Cloud Spanner instances in separate regions with at least one node.

- F. Create a Cloud Dataflow pipeline to replicate data across different databases.

Answer: A, C

Question 011

You need to migrate an internal file upload API with an enforced 500-MB file size limit to App Engine.

What should you do?

- A. Use FTP to upload files.

- B. Use CPanel to upload files.

- C. Use signed URLs to upload files.

- D. Change the API to be a multipart file upload API.

Answer: C

Reference:

– Google Cloud Platform – Christoph’s Personal Wiki

Question 012

You are planning to deploy your application in a Google Kubernetes Engine (GKE) cluster.

The application exposes an HTTP-based health check at /healthz. You want to use this health check endpoint to determine whether traffic should be routed to the pod by the load balancer.

Which code snippet should you include in your Pod configuration?

- A.

livenessProbe:
  httpGet:
    path: /healthz
    port: 80

- B.

readinessProbe:
  httpGet:
    path: /healthz
    port: 80

- C.

loadbalancerHealthCheck:
  httpGet:
    path: /healthz
    port: 80

- D.

healthCheck:
  httpGet:
    path: /healthz
    port: 80

Answer: B

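A readinessProbe determines whether a Pod receives traffic, whereas a livenessProbe only determines whether the container should be restarted. For the GKE Ingress controller to use your readiness probes as health checks, the Pods for an Ingress must exist at the time of Ingress creation. If your replicas are scaled to 0, the default health check applies.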

Question 013

Your teammate has asked you to review the code below.

Its purpose is to efficiently add a large number of small rows to a BigQuery table.

BigQuery service = BigQueryOptions.newBuilder().build().getService();

public void writeToBigQuery(Collection<Map<String, String>> rows) {
  for (Map<String, String> row : rows) {
    InsertAllRequest insertRequest = InsertAllRequest.newBuilder(
        "datasetId", "tableId",
        InsertAllRequest.RowToInsert.of(row)).build();
    service.insertAll(insertRequest);
  }
}

Which improvement should you suggest your teammate make?

- A. Include multiple rows with each request.

- B. Perform the inserts in parallel by creating multiple threads.

- C. Write each row to a Cloud Storage object, then load into BigQuery.

- D. Write each row to a Cloud Storage object in parallel, then load into BigQuery.

Answer: A
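Option A reduces the number of round trips: instead of one insert request per row, rows are grouped so that each request carries many of them. The sketch below shows the batching logic on its own; `write_in_batches` is a hypothetical helper, and `insert_fn` is a hypothetical callback so the example runs without credentials. In the Python client library (`google-cloud-bigquery`), `Client.insert_rows_json(table, json_rows)` is one call that accepts a list of rows. The batch size of 500 is only an illustrative choice.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, str]


def write_in_batches(
    rows: Iterable[Row],
    insert_fn: Callable[[List[Row]], None],
    batch_size: int = 500,
) -> int:
    """Send rows to insert_fn in groups of at most batch_size; return the request count."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    batch: List[Row] = []
    requests = 0
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            insert_fn(batch)  # one request carries a full batch of rows
            requests += 1
            batch = []
    if batch:  # flush the final partial batch
        insert_fn(batch)
        requests += 1
    return requests


if __name__ == "__main__":
    sent: List[List[Row]] = []
    rows = [{"id": str(i)} for i in range(1200)]
    count = write_in_batches(rows, sent.append, batch_size=500)
    print(count, [len(b) for b in sent])  # 3 [500, 500, 200]
```

With 1,200 rows this issues 3 requests instead of 1,200.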

Question 014

You are developing a JPEG image-resizing API hosted on Google Kubernetes Engine (GKE).

Callers of the service will exist within the same GKE cluster. You want clients to be able to get the IP address of the service.

What should you do?

- A. Define a GKE Service. Clients should use the name of the A record in Cloud DNS to find the service’s cluster IP address.

- B. Define a GKE Service. Clients should use the service name in the URL to connect to the service.

- C. Define a GKE Endpoint. Clients should get the endpoint name from the appropriate environment variable in the client container.

- D. Define a GKE Endpoint. Clients should get the endpoint name from Cloud DNS.

Answer: B
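Option B relies on cluster DNS: a Kubernetes Service gets a DNS name of the form `<service>.<namespace>.svc.<cluster-domain>`, and Pods in the same namespace can also use the short Service name. The sketch below only builds that URL; the Service name `image-resizer`, the `default` namespace, the port, and the default cluster domain `cluster.local` are assumptions for illustration.

```python
def service_url(service: str, namespace: str = "default",
                cluster_domain: str = "cluster.local", port: int = 80,
                path: str = "/") -> str:
    """Build the in-cluster URL for a Kubernetes Service via cluster DNS."""
    # Fully qualified Service name; inside the same namespace, just `service` also resolves.
    host = f"{service}.{namespace}.svc.{cluster_domain}"
    return f"http://{host}:{port}{path}"


if __name__ == "__main__":
    print(service_url("image-resizer", path="/resize"))
    # http://image-resizer.default.svc.cluster.local:80/resize
```

Because the name is stable, clients never need to look up or hard-code the Service's cluster IP.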

Question 015

You are using Cloud Build to build and test application source code stored in Cloud Source Repositories.

The build process requires a build tool not available in the Cloud Build environment.

What should you do?

- A. Download the binary from the internet during the build process.

- B. Build a custom cloud builder image and reference the image in your build steps.

- C. Include the binary in your Cloud Source Repositories repository and reference it in your build scripts.

- D. Ask to have the binary added to the Cloud Build environment by filing a feature request against the Cloud Build public Issue Tracker.

Answer: B

Question 016

You are deploying your application to a Compute Engine virtual machine instance.

Your application is configured to write its log files to disk. You want to view the logs in Stackdriver Logging without changing the application code.

What should you do?

- A. Install the Stackdriver Logging Agent and configure it to send the application logs.

- B. Use a Stackdriver Logging Library to log directly from the application to Stackdriver Logging.

- C. Provide the log file folder path in the metadata of the instance to configure it to send the application logs.

- D. Change the application to log to /var/log so that its logs are automatically sent to Stackdriver Logging.

Answer: A

Question 017

Your service adds text to images that it reads from Cloud Storage.

During busy times of the year, requests to Cloud Storage fail with an HTTP 429 “Too Many Requests” status code.

How should you handle this error?

- A. Add a cache-control header to the objects.

- B. Request a quota increase from the GCP Console.

- C. Retry the request with a truncated exponential backoff strategy.

- D. Change the storage class of the Cloud Storage bucket to Multi-regional.

Answer: C

Reference:

– Usage limits | Gmail | Google Developers
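Truncated exponential backoff means waiting roughly 1, 2, 4, 8, ... seconds between retries, capping the wait at a maximum, and adding random jitter so many clients do not retry in lockstep. The sketch below is a minimal, generic version under stated assumptions: `TooManyRequests` is a hypothetical exception standing in for an HTTP 429 response, and the retry count and delays are illustrative values. Google's client libraries often provide their own retry settings, which are usually preferable.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TooManyRequests(Exception):
    """Hypothetical exception representing an HTTP 429 response."""


def call_with_backoff(
    request: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 32.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry request() on TooManyRequests using truncated exponential backoff with jitter."""
    for attempt in range(max_retries + 1):
        try:
            return request()
        except TooManyRequests:
            if attempt == max_retries:
                raise  # give up after the final retry
            # Exponential growth (1, 2, 4, ...) capped at max_delay: the "truncated" part.
            delay = min(base_delay * (2 ** attempt), max_delay)
            # Up to 1 second of random jitter spreads out retries from many clients.
            sleep(delay + random.uniform(0, 1))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter lets tests record the delays instead of actually waiting.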

Question 018

You are building an API that will be used by Android and iOS apps.

The API must:

– Support HTTPS

– Minimize bandwidth cost

– Integrate easily with mobile apps

Which API architecture should you use?

- A. RESTful APIs

- B. MQTT for APIs

- C. gRPC-based APIs

- D. SOAP-based APIs

Answer: C

Reference:

– How to Build a REST API for Mobile App? – DevTeam.Space

Question 019

Your application takes an input from a user and publishes it to the user’s contacts.

This input is stored in a table in Cloud Spanner. Your application is more sensitive to latency and less sensitive to consistency.

How should you perform reads from Cloud Spanner for this application?

- A. Perform Read-Only transactions.

- B. Perform stale reads using single-read methods.

- C. Perform strong reads using single-read methods.

- D. Perform stale reads using read-write transactions.

Answer: B

Reference:

– Best practices for using Cloud Spanner as a gaming database

Question 020

Your application is deployed in a Google Kubernetes Engine (GKE) cluster.

When a new version of your application is released, your CI/CD tool updates the spec.template.spec.containers[0].image value to reference the Docker image of your new application version. When the Deployment object applies the change, you want to deploy at least 1 replica of the new version and maintain the previous replicas until the new replica is healthy.

Which change should you make to the GKE Deployment object shown below?

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ecommerce-frontend-deployme
spec:
  replicas: 3
  selector:
    matchLabels:
      app: ecommerce-frontend
  template:
    metadata:
      labels:
        app: ecommerce-frontend
    spec:
      containers:
      - name: ecommerce-frontend-webapp
        image: ecommerce-frontend-webapp:1.7.9
        ports:
        - containerPort: 80

- A. Set the Deployment strategy to Rolling Update with maxSurge set to 0, maxUnavailable set to 1.

- B. Set the Deployment strategy to Rolling Update with maxSurge set to 1, maxUnavailable set to 0.

- C. Set the Deployment strategy to Recreate with maxSurge set to 0, maxUnavailable set to 1.

- D. Set the Deployment strategy to Recreate with maxSurge set to 1, maxUnavailable set to 0.

Answer: B
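In the manifest, option B corresponds to `spec.strategy.type: RollingUpdate` with `rollingUpdate.maxSurge: 1` and `rollingUpdate.maxUnavailable: 0`. The arithmetic behind it can be sketched as follows, assuming absolute (not percentage) values; `rolling_update_bounds` is a hypothetical helper, not part of any Kubernetes client.

```python
def rolling_update_bounds(replicas: int, max_surge: int, max_unavailable: int):
    """Return (max_total_pods, min_available_pods) during a RollingUpdate."""
    # Kubernetes rejects a RollingUpdate where both values are 0: it could never make progress.
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    return replicas + max_surge, replicas - max_unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(3, 1, 0))  # option B: (4, 3)
    print(rolling_update_bounds(3, 0, 1))  # option A: (3, 2)
```

With option B, up to 4 Pods may exist and at least 3 must stay available, so an old replica is removed only after a new one is ready. Option A instead drops to 2 available Pods before any new one exists.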

Question 021

You plan to make a simple HTML application available on the internet.

This site keeps information about FAQs for your application. The application is static and contains images, HTML, CSS, and Javascript. You want to make this application available on the internet with as few steps as possible.

What should you do?

- A. Upload your application to Cloud Storage.

- B. Upload your application to an App Engine environment.

- C. Create a Compute Engine instance with Apache web server installed. Configure Apache web server to host the application.

- D. Containerize your application first. Deploy this container to Google Kubernetes Engine (GKE) and assign an external IP address to the GKE pod hosting the application.

Answer: A

Reference:

– Host a static website | Cloud Storage

Question 022

Your company has deployed a new API to App Engine Standard environment.

During testing, the API is not behaving as expected. You want to monitor the application over time to diagnose the problem within the application code without redeploying the application.

Which tool should you use?

- A. Stackdriver Trace

- B. Stackdriver Monitoring

- C. Stackdriver Debug Snapshots

- D. Stackdriver Debug Logpoints

Answer: D

Reference:

– GCP Stackdriver Tutorial : Debug Snapshots, Traces, Logging and Logpoints | by Romin Irani

Question 023

You want to use the Stackdriver Logging Agent to send an application’s log file to Stackdriver from a Compute Engine virtual machine instance.

After installing the Stackdriver Logging Agent, what should you do first?

- A. Enable the Error Reporting API on the project.

- B. Grant the instance full access to all Cloud APIs.

- C. Configure the application log file as a custom source.

- D. Create a Stackdriver Logs Export Sink with a filter that matches the application’s log entries.

Answer: C

Question 024

Your company has a BigQuery data mart that provides analytics information to hundreds of employees.

One user wants to run jobs without interrupting important workloads. This user isn’t concerned about the time it takes to run these jobs. You want to fulfill this request while minimizing cost to the company and the effort required on your part.

What should you do?

- A. Ask the user to run the jobs as batch jobs.

- B. Create a separate project for the user to run jobs.

- C. Add the user as a job.user role in the existing project.

- D. Allow the user to run jobs when important workloads are not running.

Answer: A

Question 025

You want to notify on-call engineers about a service degradation in production while minimizing development time.

What should you do?

- A. Use Cloud Functions to monitor resources and raise alerts.

- B. Use Cloud Pub/Sub to monitor resources and raise alerts.

- C. Use Stackdriver Error Reporting to capture errors and raise alerts.

- D. Use Stackdriver Monitoring to monitor resources and raise alerts.

Answer: D

Question 026

You are writing a single-page web application with a user-interface that communicates with a third-party API for content using XMLHttpRequest.

The API data displayed in the UI is less critical than other data on the same web page, so it is acceptable for some requests not to have their API data displayed. However, calls made to the API should not delay rendering of other parts of the user interface. You want your application to perform well when the API response is an error or a timeout.

What should you do?

- A. Set the asynchronous option for your requests to the API to false and omit the widget displaying the API results when a timeout or error is encountered.

- B. Set the asynchronous option for your request to the API to true and omit the widget displaying the API results when a timeout or error is encountered.

- C. Catch timeout or error exceptions from the API call and keep trying with exponential backoff until the API response is successful.

- D. Catch timeout or error exceptions from the API call and display the error response in the UI widget.

Answer: B
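The idea behind option B can be sketched with Python's `asyncio` standing in for an asynchronous XMLHttpRequest in the browser: the API call starts in the background, the critical parts of the page render immediately, and the widget is simply omitted if the call errors or times out. `fetch_third_party`, `render_widget`, `render_page`, and the timeout value are hypothetical names and settings for illustration.

```python
import asyncio
from typing import List, Optional


async def fetch_third_party(delay: float, fail: bool = False) -> str:
    """Hypothetical stand-in for the third-party API call."""
    await asyncio.sleep(delay)
    if fail:
        raise RuntimeError("API error")
    return "third-party content"


async def render_widget(delay: float, fail: bool, timeout: float) -> Optional[str]:
    """Return widget content, or None (omit the widget) on timeout or error."""
    try:
        return await asyncio.wait_for(fetch_third_party(delay, fail), timeout)
    except (asyncio.TimeoutError, RuntimeError):
        return None


async def render_page(rendered: List[str], delay: float, fail: bool = False,
                      timeout: float = 0.1) -> None:
    # Start the API call in the background, like an asynchronous request.
    widget_task = asyncio.create_task(render_widget(delay, fail, timeout))
    # Critical parts of the UI render without waiting for the API.
    rendered.append("header")
    rendered.append("main content")
    widget = await widget_task
    if widget is not None:
        rendered.append(widget)  # shown only when the call succeeded in time


if __name__ == "__main__":
    for delay, fail in [(0.01, False), (1.0, False), (0.01, True)]:
        page: List[str] = []
        asyncio.run(render_page(page, delay, fail))
        print(page)
```

Option A's synchronous request would block everything until the API answered, which is exactly what the requirement rules out.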

Question 027

You are creating a web application that runs in a Compute Engine instance and writes a file to any user’s Google Drive.

You need to configure the application to authenticate to the Google Drive API.

What should you do?

- A. Use an OAuth Client ID that uses the https://www.googleapis.com/auth/drive.file scope to obtain an access token for each user.

- B. Use an OAuth Client ID with delegated domain-wide authority.

- C. Use the App Engine service account and https://www.googleapis.com/auth/drive.file scope to generate a signed JSON Web Token (JWT).

- D. Use the App Engine service account with delegated domain-wide authority.

Answer: A

Reference:

– API-specific authorization and authentication information | Google Drive

Question 028

You are creating a Google Kubernetes Engine (GKE) cluster and run this command:

> gcloud container clusters create large-cluster --num-nodes 200

The command fails with the error:

insufficient regional quota to satisfy request: resource “CPUS”: request requires ‘200.0’ and is short ‘176.0’ project has quota of ‘24.0’ with ‘24.0’ available

You want to resolve the issue. What should you do?

- A. Request additional GKE quota in the GCP Console.

- B. Request additional Compute Engine quota in the GCP Console.

- C. Open a support case to request additional GKE quota.

- D. Decouple services in the cluster, and rewrite new clusters to function with fewer cores.

Answer: B

Question 029

You are parsing a log file that contains three columns: a timestamp, an account number (a string), and a transaction amount (a number).

You want to calculate the sum of all transaction amounts for each unique account number efficiently.

Which data structure should you use?

- A. A linked list

- B. A hash table

- C. A two-dimensional array

- D. A comma-delimited string

Answer: B
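A hash table gives average O(1) lookup and update per account, so one pass over the file is enough. In Python the built-in `dict` is a hash table; the sketch below assumes comma-separated lines in the order timestamp, account, amount, since the question does not state the delimiter. The function name and sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable


def sum_by_account(lines: Iterable[str]) -> Dict[str, float]:
    """Sum transaction amounts per account using a hash table (dict)."""
    totals: Dict[str, float] = defaultdict(float)
    for line in lines:
        # Assumed line format: "<timestamp>,<account>,<amount>"
        _timestamp, account, amount = line.strip().split(",")
        totals[account] += float(amount)  # average O(1) lookup and update
    return dict(totals)


if __name__ == "__main__":
    log = [
        "2024-01-01T00:00:00,ACC1,10.5",
        "2024-01-01T00:01:00,ACC2,3",
        "2024-01-01T00:02:00,ACC1,4.5",
    ]
    print(sum_by_account(log))  # {'ACC1': 15.0, 'ACC2': 3.0}
```

A linked list or two-dimensional array would need a linear search to find each account's running total.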

Question 030

Your company has a BigQuery dataset named “Master” that keeps information about employee travel and expenses.

This information is organized by employee department, and employees should only be able to view information for their own department. You want to apply a security framework to enforce this requirement with the minimum number of steps.

What should you do?

- A. Create a separate dataset for each department. Create a view with an appropriate WHERE clause to select records from a particular dataset for the specific department. Authorize this view to access records from your Master dataset. Give employees the permission to this department-specific dataset.

- B. Create a separate dataset for each department. Create a data pipeline for each department to copy appropriate information from the Master dataset to the specific dataset for the department. Give employees the permission to this department-specific dataset.

- C. Create a dataset named Master dataset. Create a separate view for each department in the Master dataset. Give employees access to the specific view for their department.

- D. Create a dataset named Master dataset. Create a separate table for each department in the Master dataset. Give employees access to the specific table for their department.

Answer: C

Question 031

You have an application in production.

It is deployed on Compute Engine virtual machine instances controlled by a managed instance group. Traffic is routed to the instances via an HTTP(S) load balancer. Your users are unable to access your application. You want to implement a monitoring technique to alert you when the application is unavailable.

Which technique should you choose?

- A. Smoke tests

- B. Stackdriver uptime checks

- C. Cloud Load Balancing – health checks

- D. Managed instance group – health checks

Answer: B

Reference:

– Stackdriver Monitoring Automation Part 3: Uptime Checks | by Charles | Google Cloud – Community | Medium

Question 032

You are load testing your server application.

During the first 30 seconds, you observe that a previously inactive Cloud Storage bucket is now servicing 2000 write requests per second and 7500 read requests per second. Your application is now receiving intermittent 5xx and 429 HTTP responses from the Cloud Storage JSON API as the demand escalates. You want to decrease the failed responses from the Cloud Storage API.

What should you do?

- A. Distribute the uploads across a large number of individual storage buckets.

- B. Use the XML API instead of the JSON API for interfacing with Cloud Storage.

- C. Pass the HTTP response codes back to clients that are invoking the uploads from your application.

- D. Limit the upload rate from your application clients so that the dormant bucket’s peak request rate is reached more gradually.

Answer: D

Reference:

– Request rate and access distribution guidelines | Cloud Storage
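Option D follows the Cloud Storage request-rate guidelines, which, as we understand them, describe an initial capacity of roughly 1,000 object writes and 5,000 object reads per second for a bucket and recommend doubling the request rate no faster than every 20 minutes; please verify these figures against the current guidelines. The observed 2,000 writes and 7,500 reads per second both exceed those starting points. The sketch below computes such a ramp-up schedule; `ramp_schedule` and its defaults are illustrative assumptions, not a Google API.

```python
from typing import List, Tuple


def ramp_schedule(target_rps: int, start_rps: int = 1000,
                  step_minutes: int = 20) -> List[Tuple[int, int]]:
    """Return (minute, allowed_rps) steps that double the rate until target_rps."""
    if start_rps <= 0 or target_rps <= 0:
        raise ValueError("rates must be positive")
    schedule: List[Tuple[int, int]] = []
    minute, rate = 0, min(start_rps, target_rps)
    while True:
        schedule.append((minute, rate))
        if rate >= target_rps:
            return schedule
        # Double the allowed rate, but never overshoot the target.
        rate = min(rate * 2, target_rps)
        minute += step_minutes


if __name__ == "__main__":
    print(ramp_schedule(2000))                  # writes: [(0, 1000), (20, 2000)]
    print(ramp_schedule(7500, start_rps=5000))  # reads: [(0, 5000), (20, 7500)]
```

Clients would throttle themselves to the current step's rate, which lets the bucket scale its capacity before peak load arrives.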

Question 033

Your application is controlled by a managed instance group.

You want to share a large read-only data set between all the instances in the managed instance group. You want to ensure that each instance can start quickly and can access the data set via its filesystem with very low latency. You also want to minimize the total cost of the solution.

What should you do?

- A. Move the data to a Cloud Storage bucket, and mount the bucket on the filesystem using Cloud Storage FUSE.

- B. Move the data to a Cloud Storage bucket, and copy the data to the boot disk of the instance via a startup script.

- C. Move the data to a Compute Engine persistent disk, and attach the disk in read-only mode to multiple Compute Engine virtual machine instances.

- D. Move the data to a Compute Engine persistent disk, take a snapshot, create multiple disks from the snapshot, and attach each disk to its own instance.

Answer: C

Question 034

You are developing an HTTP API hosted on a Compute Engine virtual machine instance that needs to be invoked by multiple clients within the same Virtual Private Cloud (VPC).

You want clients to be able to get the IP address of the service.

What should you do?

- A. Reserve a static external IP address and assign it to an HTTP(S) load balancing service’s forwarding rule. Clients should use this IP address to connect to the service.

- B. Reserve a static external IP address and assign it to an HTTP(S) load balancing service’s forwarding rule. Then, define an A record in Cloud DNS. Clients should use the name of the A record to connect to the service.

- C. Ensure that clients use Compute Engine internal DNS by connecting to the instance name with the URL https://[INSTANCE_NAME].[ZONE].c.[PROJECT_ID].internal/.

- D. Ensure that clients use Compute Engine internal DNS by connecting to the instance name with the url https://[API_NAME]/[API_VERSION]/.

Answer: C
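The zonal internal DNS name in option C is built from the instance name, its zone, and the project ID. The sketch below just assembles that hostname; the function name and the sample values `api-server`, `us-east1-b`, and `my-gcp-project` are hypothetical.

```python
def zonal_internal_url(instance: str, zone: str, project_id: str,
                       scheme: str = "https", path: str = "/") -> str:
    """Build a Compute Engine zonal internal DNS URL for an instance."""
    # Format from option C: [INSTANCE_NAME].[ZONE].c.[PROJECT_ID].internal
    host = f"{instance}.{zone}.c.{project_id}.internal"
    return f"{scheme}://{host}{path}"


if __name__ == "__main__":
    print(zonal_internal_url("api-server", "us-east1-b", "my-gcp-project"))
    # https://api-server.us-east1-b.c.my-gcp-project.internal/
```

This name resolves only inside the VPC, which matches the requirement that the clients are internal; options A and B would unnecessarily expose the API on an external IP.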

Question 035

Your application is logging to Stackdriver.

You want to get the count of all requests on all /api/alpha/* endpoints.

What should you do?

- A. Add a Stackdriver counter metric for path:/api/alpha/.

- B. Add a Stackdriver counter metric for endpoint:/api/alpha/*.

- C. Export the logs to Cloud Storage and count lines matching /api/alpha.

- D. Export the logs to Cloud Pub/Sub and count lines matching /api/alpha.

Answer: B

Question 036

You want to re-architect a monolithic application so that it follows a microservices model.

You want to accomplish this efficiently while minimizing the impact of this change to the business.

Which approach should you take?

- A. Deploy the application to Compute Engine and turn on autoscaling.

- B. Replace the application’s features with appropriate microservices in phases.

- C. Refactor the monolithic application with appropriate microservices in a single effort and deploy it.

- D. Build a new application with the appropriate microservices separate from the monolith and replace it when it is complete.

Answer: B

Reference:

– Migrating a monolithic application to microservices on Google Kubernetes Engine

Question 037

Your existing application keeps user state information in a single MySQL database.

This state information is very user-specific and depends heavily on how long a user has been using the application. The MySQL database makes it challenging to maintain and enhance the schema for different users.

Which storage option should you choose?

- A. Cloud SQL

- B. Cloud Storage

- C. Cloud Spanner

- D. Cloud Datastore/Firestore

Answer: D

Reference:

– Database Migration Service | Google Cloud

Question 038

You are building a new API.

You want to minimize the cost of storing images and reduce the latency of serving them.

Which architecture should you use?

- A. App Engine backed by Cloud Storage

- B. Compute Engine backed by Persistent Disk

- C. Transfer Appliance backed by Cloud Filestore

- D. Cloud Content Delivery Network (CDN) backed by Cloud Storage

Answer: D

Question 039

Your company’s development teams want to use Cloud Build in their projects to build and push Docker images to Container Registry.

The operations team requires all Docker images to be published to a centralized, securely managed Docker registry that the operations team manages.

What should you do?

- A. Use Container Registry to create a registry in each development team’s project. Configure the Cloud Build build to push the Docker image to the project’s registry. Grant the operations team access to each development team’s registry.

- B. Create a separate project for the operations team that has Container Registry configured. Assign appropriate permissions to the Cloud Build service account in each development team’s project to allow access to the operations team’s registry.

- C. Create a separate project for the operations team that has Container Registry configured. Create a Service Account for each development team and assign the appropriate permissions to allow it access to the operations team’s registry. Store the service account key file in the source code repository and use it to authenticate against the operations team’s registry.

- D. Create a separate project for the operations team that has the open source Docker Registry deployed on a Compute Engine virtual machine instance. Create a username and password for each development team. Store the username and password in the source code repository and use it to authenticate against the operations team’s Docker registry.

Answer: B

Reference:

– Container Registry | Google Cloud

Question 040

You are planning to deploy your application in a Google Kubernetes Engine (GKE) cluster.

Your application can scale horizontally, and each instance of your application needs to have a stable network identity and its own persistent disk.

Which GKE object should you use?

- A. Deployment

- B. StatefulSet

- C. ReplicaSet

- D. ReplicationController

Answer: B

Reference:

– StatefulSet | Google Kubernetes Engine(GKE) | Google Cloud

– Chapter 10. StatefulSets: deploying replicated stateful applications – Kubernetes in Action

Question 041

You are using Cloud Build to build a Docker image.

You need to modify the build to execute unit tests and run integration tests. When there is a failure, you want the build history to clearly display the stage at which the build failed.

What should you do?

- A. Add RUN commands in the Dockerfile to execute unit and integration tests.

- B. Create a Cloud Build build config file with a single build step to compile unit and integration tests.

- C. Create a Cloud Build build config file that will spawn a separate cloud build pipeline for unit and integration tests.

- D. Create a Cloud Build build config file with separate cloud builder steps to compile and execute unit and integration tests.

Answer: D

Question 042

Your code is running on Cloud Functions in project A.

It is supposed to write an object in a Cloud Storage bucket owned by project B. However, the write call is failing with the error “403 Forbidden”.

What should you do to correct the problem?

- A. Grant your user account the roles/storage.objectCreator role for the Cloud Storage bucket.

- B. Grant your user account the roles/iam.serviceAccountUser role for the service-PROJECTA@gcf-admin-robot.iam.gserviceaccount.com service account.

- C. Grant the service-PROJECTA@gcf-admin-robot.iam.gserviceaccount.com service account the roles/storage.objectCreator role for the Cloud Storage bucket.

- D. Enable the Cloud Storage API in project B.

Answer: C

Question 043

For this question, refer to the HipLocal case study.

HipLocal’s .NET-based auth service fails under intermittent load.

What should they do?

- A. Use App Engine for autoscaling.

- B. Use Cloud Functions for autoscaling.

- C. Use a Compute Engine cluster for the service.

- D. Use a dedicated Compute Engine virtual machine instance for the service.

Answer: C

Reference:

– Autoscaling an Instance Group with Custom Cloud Monitoring Metrics

Question 044

For this question, refer to the HipLocal case study.

HipLocal’s APIs are having occasional application failures.

They want to collect application information specifically to troubleshoot the issue.

What should they do?

- A. Take frequent snapshots of the virtual machines.

- B. Install the Cloud Logging agent on the virtual machines.

- C. Install the Cloud Monitoring agent on the virtual machines.

- D. Use Cloud Trace to look for performance bottlenecks.

Answer: B

Question 045

For this question, refer to the HipLocal case study.

HipLocal has connected their Hadoop infrastructure to GCP using Cloud Interconnect in order to query data stored on persistent disks.

Which IP strategy should they use?

- A. Create manual subnets.

- B. Create an auto mode subnet.

- C. Create multiple peered VPCs.

- D. Provision a single instance for NAT.

Answer: A

Question 046

For this question, refer to the HipLocal case study.

Which service should HipLocal use to enable access to internal apps?

- A. Cloud VPN

- B. Cloud Armor

- C. Virtual Private Cloud

- D. Cloud Identity-Aware Proxy

Answer: D

Reference:

– Overview of IAP for on-premises apps | Identity-Aware Proxy | Google Cloud

Question 047

For this question, refer to the HipLocal case study.

HipLocal wants to reduce the number of on-call engineers and eliminate manual scaling.

Which two services should they choose? (Choose two.)

- A. Use Google App Engine services.

- B. Use serverless Google Cloud Functions.

- C. Use Knative to build and deploy serverless applications.

- D. Use Google Kubernetes Engine for automated deployments.

- E. Use a large Google Compute Engine cluster for deployments.

Answer: C, D

Question 048

For this question, refer to the HipLocal case study.

In order to meet their business requirements, how should HipLocal store their application state?

- A. Use local SSDs to store state.

- B. Put a memcache layer in front of MySQL.

- C. Move the state storage to Cloud Spanner.

- D. Replace the MySQL instance with Cloud SQL.

Answer: D

Question 049

For this question, refer to the HipLocal case study.

Which service should HipLocal use for their public APIs?

- A. Cloud Armor

- B. Cloud Functions

- C. Cloud Endpoints

- D. Shielded Virtual Machines

Answer: C

Question 050

For this question, refer to the HipLocal case study.

HipLocal wants to improve the resilience of their MySQL deployment, while also meeting their business and technical requirements.

Which configuration should they choose?

- A. Use the current single instance MySQL on Compute Engine and several read-only MySQL servers on Compute Engine.

- B. Use the current single instance MySQL on Compute Engine, and replicate the data to Cloud SQL in an external master configuration.

- C. Replace the current single instance MySQL instance with Cloud SQL, and configure high availability.

- D. Replace the current single instance MySQL instance with Cloud SQL, and Google provides redundancy without further configuration.

Answer: C

Question 051

Your application is running in multiple Google Kubernetes Engine clusters.

It is managed by a Deployment in each cluster. The Deployment has created multiple replicas of your Pod in each cluster. You want to view the logs sent to stdout for all of the replicas in your Deployment in all clusters.

Which command should you use?

- A. kubectl logs [PARAM]

- B. gcloud logging read [PARAM]

- C. kubectl exec -it [PARAM] journalctl

- D. gcloud compute ssh [PARAM] --command="sudo journalctl"

Answer: B

Question 052

You are using Cloud Build to create a new Docker image on each source code commit to a Cloud Source Repositories repository.

Your application is built on every commit to the master branch. You want to release specific commits made to the master branch in an automated method.

What should you do?

- A. Manually trigger the build for new releases.

- B. Create a build trigger on a Git tag pattern. Use a Git tag convention for new releases.

- C. Create a build trigger on a Git branch name pattern. Use a Git branch naming convention for new releases.

- D. Commit your source code to a second Cloud Source Repositories repository with a second Cloud Build trigger. Use this repository for new releases only.

Answer: B

Reference:

– Set up Automated Builds
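
As a rough illustration of answer B, the sketch below checks which Git tag names a release convention would accept. The `v<major>.<minor>.<patch>` pattern and the `ReleaseTagFilter` class are hypothetical; in practice Cloud Build applies the trigger's own tag filter (written in RE2 syntax), so this only shows how a tag convention separates release commits from ordinary pushes to master.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

final class ReleaseTagFilter {
    // Hypothetical release convention: v<major>.<minor>.<patch>, e.g. v1.4.2.
    // Anchored pattern: the whole tag must match, so "v1.4.2-rc1" is rejected.
    static final Pattern RELEASE_TAG = Pattern.compile("^v\\d+\\.\\d+\\.\\d+$");

    // True if a pushed tag follows the release convention.
    static boolean isReleaseTag(String tag) {
        return RELEASE_TAG.matcher(tag).matches();
    }

    // Keeps only the tags that would start a release build.
    static List<String> releaseTags(List<String> tags) {
        return tags.stream()
                .filter(ReleaseTagFilter::isReleaseTag)
                .collect(Collectors.toList());
    }
}
```

Ordinary commits to master keep building as before; only pushing a matching tag (for example `git tag v1.4.2` followed by `git push origin v1.4.2`) would select that commit for release.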

Question 053

You are designing a schema for a table that will be moved from MySQL to Cloud Bigtable.

The MySQL table is as follows:

    AccountActivity (
        Account_id int,
        Event_timestamp datetime,
        Transaction_type string,
        Amount numeric(18, 4)
    ) primary key (Account_id, Event_timestamp)

How should you design a row key for Cloud Bigtable for this table?

- A. Set Account_id as a key.

- B. Set Account_id_Event_timestamp as a key.

- C. Set Event_timestamp_Account_id as a key.

- D. Set Event_timestamp as a key.

Answer: B
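
Answer B works because Bigtable stores rows sorted lexicographically by row key: a key that starts with the account ID keeps each account's events next to each other, so one prefix scan returns an account's history, while a timestamp-first key would send all new writes to the same end of the table. The sketch below is one hypothetical way to build such a key; the `#` delimiter, the zero-padding width, and the class name are illustrative choices, not Bigtable requirements, and it assumes non-negative IDs and timestamps.

```java
import java.time.Instant;

final class AccountActivityKeys {
    // Builds "<Account_id>#<Event_timestamp>" with both parts zero-padded to 19 digits,
    // so string (byte) order matches numeric order, e.g. account 42 sorts before 123.
    static String rowKey(long accountId, Instant eventTimestamp) {
        return String.format("%019d#%019d", accountId, eventTimestamp.toEpochMilli());
    }

    // Prefix that selects every event of one account in a prefix scan.
    static String accountPrefix(long accountId) {
        return String.format("%019d#", accountId);
    }
}
```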

Question 054

You want to view the memory usage of your application deployed on Compute Engine.

What should you do?

- A. Install the Stackdriver Client Library.

- B. Install the Stackdriver Monitoring Agent.

- C. Use the Stackdriver Metrics Explorer.

- D. Use the Google Cloud Platform Console.

Answer: B

Reference:

– Google Cloud Platform: how to monitor memory usage of VM instances – Stack Overflow

Question 055

You have an analytics application that runs hundreds of queries on BigQuery every few minutes using the BigQuery API.

You want to find out how much time these queries take to execute.

What should you do?

- A. Use Stackdriver Monitoring to plot slot usage.

- B. Use Stackdriver Trace to plot API execution time.

- C. Use Stackdriver Trace to plot query execution time.

- D. Use Stackdriver Monitoring to plot query execution times.

Answer: D

Question 056

You are designing a schema for a Cloud Spanner customer database.

You want to store a phone number array field in a customer table. You also want to allow users to search customers by phone number.

How should you design this schema?

- A. Create a table named Customers. Add an Array field in a table that will hold phone numbers for the customer.

- B. Create a table named Customers. Create a table named Phones. Add a CustomerId field in the Phones table to find the CustomerId from a phone number.

- C. Create a table named Customers. Add an Array field in a table that will hold phone numbers for the customer. Create a secondary index on the Array field.

- D. Create a table named Customers as a parent table. Create a table named Phones, and interleave this table into the Customers table. Create an index on the phone number field in the Phones table.

Answer: D

Question 057

You are deploying a single website on App Engine that needs to be accessible via the URL http://www.altostrat.com/.

What should you do?

- A. Verify domain ownership with Webmaster Central. Create a DNS CNAME record to point to the App Engine canonical name ghs.googlehosted.com.

- B. Verify domain ownership with Webmaster Central. Define an A record pointing to the single global App Engine IP address.

- C. Define a mapping in dispatch.yaml to point the domain www.altostrat.com to your App Engine service. Create a DNS CNAME record to point to the App Engine canonical name ghs.googlehosted.com.

- D. Define a mapping in dispatch.yaml to point the domain www.altostrat.com to your App Engine service. Define an A record pointing to the single global App Engine IP address.

Answer: A

Reference:

– Mapping custom domains | Google App Engine flexible environment docs

Question 058

You are running an application on App Engine that you inherited.

You want to find out whether the application is using insecure binaries or is vulnerable to XSS attacks.

Which service should you use?

- A. Cloud Armor

- B. Stackdriver Debugger

- C. Cloud Security Scanner

- D. Stackdriver Error Reporting

Answer: C

Reference:

– Security Command Center | Google Cloud

Question 059

You are working on a social media application.

You plan to add a feature that allows users to upload images. These images will be 2 MB to 1 GB in size. You want to minimize the infrastructure operations overhead for this feature.

What should you do?

- A. Change the application to accept images directly and store them in the database that stores other user information.

- B. Change the application to create signed URLs for Cloud Storage. Transfer these signed URLs to the client application to upload images to Cloud Storage.

- C. Set up a web server on GCP to accept user images and create a file store to keep uploaded files. Change the application to retrieve images from the file store.

- D. Create a separate bucket for each user in Cloud Storage. Assign a separate service account to allow write access on each bucket. Transfer service account credentials to the client application based on user information. The application uses this service account to upload images to Cloud Storage.

Answer: B

Reference:

– Uploading images directly to Cloud Storage by using Signed URL | Google Cloud Blog

Question 060

Your application is built as a custom machine image.

You have multiple unique deployments of the machine image. Each deployment is a separate managed instance group with its own template. Each deployment requires a unique set of configuration values. You want to provide these unique values to each deployment but use the same custom machine image in all deployments. You want to use out-of-the-box features of Compute Engine.

What should you do?

- A. Place the unique configuration values in the persistent disk.

- B. Place the unique configuration values in a Cloud Bigtable table.

- C. Place the unique configuration values in the instance template startup script.

- D. Place the unique configuration values in the instance template instance metadata.

Answer: D

Reference:

– Instance groups | Compute Engine Documentation | Google Cloud

Question 061

Your application performs well when tested locally, but it runs significantly slower after you deploy it to a Compute Engine instance.

You need to diagnose the problem.

What should you do?

- A. File a ticket with Cloud Support indicating that the application performs faster locally.

- B. Use Cloud Debugger snapshots to look at a point-in-time execution of the application.

- C. Use Cloud Profiler to determine which functions within the application take the longest amount of time.

- D. Add logging commands to the application and use Cloud Logging to check where the latency problem occurs.

Answer: C

Question 062

You have an application running in App Engine.

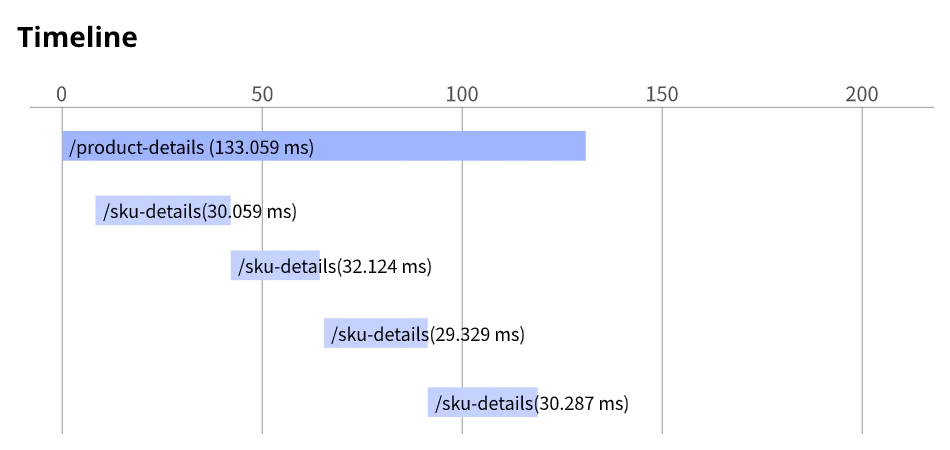

Your application is instrumented with Stackdriver Trace. The /product-details request reports details about four known unique products at /sku-details, as shown in the trace above. You want to reduce the time it takes for the request to complete.

What should you do?

- A. Increase the size of the instance class.

- B. Change the Persistent Disk type to SSD.

- C. Change /product-details to perform the requests in parallel.

- D. Store the /sku-details information in a database, and replace the webservice call with a database query.

Answer: C
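
A minimal sketch of answer C, assuming the four /sku-details lookups do not depend on each other. `fetchSkuDetails` is a hypothetical stand-in for the real web-service call (it only sleeps to simulate latency); starting every call before waiting on any of them lets the total time approach the slowest single call instead of the sum of all four.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.stream.Collectors;

final class ProductDetails {
    // Hypothetical stand-in for the remote /sku-details call.
    static String fetchSkuDetails(String sku) {
        try {
            Thread.sleep(100); // simulated network latency
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return "details-for-" + sku;
    }

    // Starts every lookup first, then waits for all of them; results keep the input order.
    static List<String> fetchAll(List<String> skus) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, skus.size()));
        try {
            List<CompletableFuture<String>> futures = skus.stream()
                    .map(sku -> CompletableFuture.supplyAsync(() -> fetchSkuDetails(sku), pool))
                    .collect(Collectors.toList());
            return futures.stream().map(CompletableFuture::join).collect(Collectors.toList());
        } finally {
            pool.shutdown();
        }
    }
}
```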

Question 063

Your company has a data warehouse that keeps your application information in BigQuery.

The BigQuery data warehouse keeps 2 PB of user data. Recently, your company expanded your user base to include EU users and needs to comply with these requirements:

– Your company must be able to delete all user account information upon user request.

– All EU user data must be stored in a single region specifically for EU users.

Which two actions should you take? (Choose two.)

- A. Use BigQuery federated queries to query data from Cloud Storage.

- B. Create a dataset in the EU region that will keep information about EU users only.

- C. Create a Cloud Storage bucket in the EU region to store information for EU users only.

- D. Re-upload your data using a Cloud Dataflow pipeline by filtering your user records out.

- E. Use DML statements in BigQuery to update/delete user records based on their requests.

Answer: B, E

Reference:

– What is BigQuery? | Google Cloud

Question 064

Your App Engine standard configuration is as follows:

– service: production

– instance_class: B1

You want to limit the application to 5 instances.

Which code snippet should you include in your configuration?

- A. manual_scaling: instances: 5 min_pending_latency: 30ms

- B. manual_scaling: max_instances: 5 idle_timeout: 10m

- C. basic_scaling: instances: 5 min_pending_latency: 30ms

- D. basic_scaling: max_instances: 5 idle_timeout: 10m

Answer: D

Question 065

Your analytics system executes queries against a BigQuery dataset.

The SQL query is executed in batch and passes the contents of a SQL file to the BigQuery CLI. Then it redirects the BigQuery CLI output to another process. However, you are getting a permission error from the BigQuery CLI when the queries are executed. You want to resolve the issue.

What should you do?

- A. Grant the service account BigQuery Data Viewer and BigQuery Job User roles.

- B. Grant the service account BigQuery Data Editor and BigQuery Data Viewer roles.

- C. Create a view in BigQuery from the SQL query and SELECT * from the view in the CLI.

- D. Create a new dataset in BigQuery, and copy the source table to the new dataset. Query the new dataset and table from the CLI.

Answer: A

Question 066

Your application is running on Compute Engine and is showing sustained failures for a small number of requests.

You have narrowed the cause down to a single Compute Engine instance, but the instance is unresponsive to SSH.

What should you do next?

- A. Reboot the machine.

- B. Enable and check the serial port output.

- C. Delete the machine and create a new one.

- D. Take a snapshot of the disk and attach it to a new machine.

Answer: B

Question 067

You configured your Compute Engine instance group to scale automatically according to overall CPU usage.

However, your application’s response latency increases sharply before the cluster has finished adding instances. You want to provide a more consistent latency experience for your end users by changing the configuration of the instance group autoscaler.

Which two configuration changes should you make? (Choose two.)

- A. Add the label AUTOSCALE to the instance group template.

- B. Decrease the cool-down period for instances added to the group.

- C. Increase the target CPU usage for the instance group autoscaler.

- D. Decrease the target CPU usage for the instance group autoscaler.

- E. Remove the health-check for individual VMs in the instance group.

Answer: B, D
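
To see why lowering the target (answer D) helps, the simplified model below estimates how many VMs an autoscaler aiming at a given average CPU utilization would want for the same load. It is a rough approximation for illustration, not the Compute Engine autoscaler's actual algorithm (which also applies stabilization and the initialization period); the class and method names are hypothetical.

```java
final class CpuAutoscalerModel {
    // Approximate group size that brings average utilization down to the target.
    // totalCpuLoad is the summed utilization of all VMs (e.g. 4 VMs at 75% -> 3.0).
    static int recommendedSize(double totalCpuLoad, double targetUtilization) {
        if (targetUtilization <= 0 || targetUtilization > 1) {
            throw new IllegalArgumentException("target must be in (0, 1]");
        }
        return (int) Math.ceil(totalCpuLoad / targetUtilization);
    }
}
```

With a load of 3.0, a 0.75 target asks for 4 VMs while a 0.5 target asks for 6, so the group adds capacity earlier and keeps more headroom; a shorter cool-down (answer B) lets the autoscaler act on new VMs' metrics sooner.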

Question 068

You have an application controlled by a managed instance group.

When you deploy a new version of the application, costs should be minimized and the number of instances should not increase. You want to ensure that, when each new instance is created, the deployment only continues if the new instance is healthy.

What should you do?

- A. Perform a rolling-action with maxSurge set to 1, maxUnavailable set to 0.

- B. Perform a rolling-action with maxSurge set to 0, maxUnavailable set to 1.

- C. Perform a rolling-action with maxHealthy set to 1, maxUnhealthy set to 0.

- D. Perform a rolling-action with maxHealthy set to 0, maxUnhealthy set to 1.

Answer: B

Reference:

– Automatically apply VM configuration updates in a MIG | Compute Engine Documentation | Google Cloud

Question 069

Your application requires service accounts to be authenticated to GCP products via credentials stored on its host Compute Engine virtual machine instances.

You want to distribute these credentials to the host instances as securely as possible.

What should you do?

- A. Use HTTP signed URLs to securely provide access to the required resources.

- B. Use the instance’s service account Application Default Credentials to authenticate to the required resources.

- C. Generate a P12 file from the GCP Console after the instance is deployed, and copy the credentials to the host instance before starting the application.

- D. Commit the credential JSON file into your application’s source repository, and have your CI/CD process package it with the software that is deployed to the instance.

Answer: B

Reference:

– Authenticate to Compute Engine – Documentation

Question 070

Your application is deployed in a Google Kubernetes Engine (GKE) cluster.

You want to expose this application publicly behind a Cloud Load Balancing HTTP(S) load balancer.

What should you do?

- A. Configure a GKE Ingress resource.

- B. Configure a GKE Service resource.

- C. Configure a GKE Ingress resource with type: LoadBalancer.

- D. Configure a GKE Service resource with type: LoadBalancer.

Answer: A

Reference:

– GKE Ingress for HTTP(S) Load Balancing | Google Kubernetes Engine (GKE)

Question 071

Your company is planning to migrate their on-premises Hadoop environment to the cloud.

Increasing storage cost and maintenance of data stored in HDFS is a major concern for your company. You also want to make minimal changes to existing data analytics jobs and existing architecture.

How should you proceed with the migration?

- A. Migrate your data stored in Hadoop to BigQuery. Change your jobs to source their information from BigQuery instead of the on-premises Hadoop environment.

- B. Create Compute Engine instances with HDD instead of SSD to save costs. Then perform a full migration of your existing environment into the new one in Compute Engine instances.

- C. Create a Cloud Dataproc cluster on Google Cloud Platform, and then migrate your Hadoop environment to the new Cloud Dataproc cluster. Move your HDFS data into larger HDD disks to save on storage costs.

- D. Create a Cloud Dataproc cluster on Google Cloud Platform, and then migrate your Hadoop code objects to the new cluster. Move your data to Cloud Storage and leverage the Cloud Dataproc connector to run jobs on that data.

Answer: D

Question 072

Your data is stored in Cloud Storage buckets.

Fellow developers have reported that data downloaded from Cloud Storage is resulting in slow API performance. You want to research the issue to provide details to the Google Cloud support team.

Which command should you run?

- A. gsutil test -o output.json gs://my-bucket

- B. gsutil perfdiag -o output.json gs://my-bucket

- C. gcloud compute scp example-instance:~/test-data -o output.json gs://my-bucket

- D. gcloud services test -o output.json gs://my-bucket

Answer: B

Question 073

You are using Cloud Build to promote a Docker image to Development, Test, and Production environments.

You need to ensure that the same Docker image is deployed to each of these environments.

How should you identify the Docker image in your build?

- A. Use the latest Docker image tag.

- B. Use a unique Docker image name.

- C. Use the digest of the Docker image.

- D. Use a semantic version Docker image tag.

Answer: C
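
A digest pins content rather than a movable label: a registry image digest is the SHA-256 of the image manifest, so any change to the image yields a different digest, whereas a tag such as latest can be repointed. The sketch below only illustrates that idea with the JDK's `MessageDigest`; the manifest bytes and repository name are hypothetical, and in practice you would use the digest reported by the registry or the build rather than compute it yourself.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

final class ImageDigest {
    // Returns "sha256:<hex>" for the given manifest bytes.
    static String digestOf(byte[] manifest) {
        try {
            byte[] hash = MessageDigest.getInstance("SHA-256").digest(manifest);
            StringBuilder hex = new StringBuilder("sha256:");
            for (byte b : hash) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("every JDK must provide SHA-256", e);
        }
    }

    // Builds an immutable reference of the form "<repository>@sha256:<hex>".
    static String pinnedReference(String repository, byte[] manifest) {
        return repository + "@" + digestOf(manifest);
    }
}
```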

Question 074

Your company has created an application that uploads a report to a Cloud Storage bucket.

When the report is uploaded to the bucket, you want to publish a message to a Cloud Pub/Sub topic. You want a solution that takes minimal effort to implement.

What should you do?

- A. Configure the Cloud Storage bucket to trigger Cloud Pub/Sub notifications when objects are modified.

- B. Create an App Engine application to receive the file; when it is received, publish a message to the Cloud Pub/Sub topic.

- C. Create a Cloud Function that is triggered by the Cloud Storage bucket. In the Cloud Function, publish a message to the Cloud Pub/Sub topic.

- D. Create an application deployed in a Google Kubernetes Engine cluster to receive the file; when it is received, publish a message to the Cloud Pub/Sub topic.

Answer: A

Reference:

– Pub/Sub notifications for Cloud Storage

Question 075

Your teammate has asked you to review the code below, which is adding a credit to an account balance in Cloud Datastore.

Which improvement should you suggest your teammate make?

    public Entity creditAccount(long accountId, long creditAmount) {
        Entity account = datastore.get(keyFactory.newKey(accountId));
        account = Entity.builder(account).set(
            "balance", account.getLong("balance") + creditAmount).build();
        datastore.put(account);
        return account;
    }

- A. Get the entity with an ancestor query.

- B. Get and put the entity in a transaction.

- C. Use a strongly consistent transactional database.

- D. Don’t return the account entity from the function.

Answer: B

Question 076

Your company stores their source code in a Cloud Source Repositories repository.

Your company wants to build and test their code on each source code commit to the repository and requires a solution that is managed and has minimal operations overhead.

Which method should they use?

- A. Use Cloud Build with a trigger configured for each source code commit.

- B. Use Jenkins deployed via the Google Cloud Marketplace, configured to watch for source code commits.

- C. Use a Compute Engine virtual machine instance with an open source continuous integration tool, configured to watch for source code commits.

- D. Use a source code commit trigger to push a message to a Cloud Pub/Sub topic that triggers an App Engine service to build the source code.

Answer: A

Question 077

You are writing a Compute Engine hosted application in project A that needs to securely authenticate to a Cloud Pub/Sub topic in project B.

What should you do?

- A. Configure the instances with a service account owned by project B. Add the service account as a Cloud Pub/Sub publisher to project A.

- B. Configure the instances with a service account owned by project A. Add the service account as a publisher on the topic.

- C. Configure Application Default Credentials to use the private key of a service account owned by project B. Add the service account as a Cloud Pub/Sub publisher to project A.

- D. Configure Application Default Credentials to use the private key of a service account owned by project A. Add the service account as a publisher on the topic.

Answer: B

Question 078

You are developing a corporate tool on Compute Engine for the finance department, which needs to authenticate users and verify that they are in the finance department.

All company employees use G Suite.

What should you do?

- A. Enable Cloud Identity-Aware Proxy on the HTTP(S) load balancer and restrict access to a Google Group containing users in the finance department. Verify the provided JSON Web Token within the application.

- B. Enable Cloud Identity-Aware Proxy on the HTTP(S) load balancer and restrict access to a Google Group containing users in the finance department. Issue client-side certificates to everybody in the finance team and verify the certificates in the application.

- C. Configure Cloud Armor Security Policies to restrict access to only corporate IP address ranges. Verify the provided JSON Web Token within the application.

- D. Configure Cloud Armor Security Policies to restrict access to only corporate IP address ranges. Issue client-side certificates to everybody in the finance team and verify the certificates in the application.

Answer: A

Question 079

Your API backend is running on multiple cloud providers.

You want to generate reports for the network latency of your API.

Which two steps should you take? (Choose two.)

- A. Use Zipkin collector to gather data.

- B. Use Fluentd agent to gather data.

- C. Use Stackdriver Trace to generate reports.

- D. Use Stackdriver Debugger to generate reports.

- E. Use Stackdriver Profiler to generate reports.

Answer: A, C

Question 080

For this question, refer to the HipLocal case study.

Which database should HipLocal use for storing user activity?

- A. BigQuery

- B. Cloud SQL

- C. Cloud Spanner

- D. Cloud Datastore

Answer: A

Question 081

For this question, refer to the HipLocal case study.

HipLocal is configuring their access controls.

Which firewall configuration should they implement?

- A. Block all traffic on port 443.

- B. Allow all traffic into the network.

- C. Allow traffic on port 443 for a specific tag.

- D. Allow all traffic on port 443 into the network.

Answer: C

Question 082

For this question, refer to the HipLocal case study.

HipLocal’s data science team wants to analyze user reviews.

How should they prepare the data?

- A. Use the Cloud Data Loss Prevention API for redaction of the review dataset.

- B. Use the Cloud Data Loss Prevention API for de-identification of the review dataset.

- C. Use the Cloud Natural Language Processing API for redaction of the review dataset.

- D. Use the Cloud Natural Language Processing API for de-identification of the review dataset.

Answer: B

Question 083

For this question, refer to the HipLocal case study.

In order for HipLocal to store application state and meet their stated business requirements, which database service should they migrate to?

- A. Cloud Spanner

- B. Cloud Datastore

- C. Cloud Memorystore as a cache

- D. Separate Cloud SQL clusters for each region

Answer: D

Question 084

You have an application deployed in production.

When a new version is deployed, you want to ensure that all production traffic is routed to the new version of your application. You also want to keep the previous version deployed so that you can revert to it if there is an issue with the new version.

Which deployment strategy should you use?

- A. Blue/green deployment

- B. Canary deployment

- C. Rolling deployment

- D. Recreate deployment

Answer: A

Question 085

You are porting an existing Apache/MySQL/PHP application stack from a single machine to Google Kubernetes Engine.

You need to determine how to containerize the application. Your approach should follow Google-recommended best practices for availability.

What should you do?

- A. Package each component in a separate container. Implement readiness and liveness probes.

- B. Package the application in a single container. Use a process management tool to manage each component.

- C. Package each component in a separate container. Use a script to orchestrate the launch of the components.

- D. Package the application in a single container. Use a bash script as an entrypoint to the container, and then spawn each component as a background job.

Answer: A

Reference:

– Best practices for building containers | Cloud Architecture Center

Question 086

You are developing an application that will be launched on Compute Engine instances into multiple distinct projects, each corresponding to the environments in your software development process (development, QA, staging, and production).

The instances in each project have the same application code but a different configuration. During deployment, each instance should receive the application’s configuration based on the environment it serves. You want to minimize the number of steps to configure this flow.

What should you do?

- A. When creating your instances, configure a startup script using the gcloud command to determine the project name that indicates the correct environment.

- B. In each project, configure a metadata key environment whose value is the environment it serves. Use your deployment tool to query the instance metadata and configure the application based on the environment value.

- C. Deploy your chosen deployment tool on an instance in each project. Use a deployment job to retrieve the appropriate configuration file from your version control system, and apply the configuration when deploying the application on each instance.

- D. During each instance launch, configure an instance custom-metadata key named environment whose value is the environment the instance serves. Use your deployment tool to query the instance metadata, and configure the application based on the environment value.

Answer: B

Reference:

– About VM metadata | Compute Engine Documentation | Google Cloud
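
For answer B, the deployment tool reads the project-level environment key from the metadata server. The sketch below builds that request with the JDK's `java.net.http` client; it assumes the key is named environment as in the answer, the class name is hypothetical, and the call only succeeds when run on a Compute Engine instance. The Metadata-Flavor: Google header is required by the metadata server.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class EnvironmentMetadata {
    static final String BASE = "http://metadata.google.internal/computeMetadata/v1/";

    // Request for a project-wide custom metadata value, e.g. key "environment".
    static HttpRequest projectAttributeRequest(String key) {
        return HttpRequest.newBuilder(URI.create(BASE + "project/attributes/" + key))
                .header("Metadata-Flavor", "Google") // required by the metadata server
                .GET()
                .build();
    }

    // Works only on a Compute Engine VM; elsewhere the request is expected to fail.
    static String readEnvironment() throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(projectAttributeRequest("environment"), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("metadata lookup failed: HTTP " + response.statusCode());
        }
        return response.body().trim();
    }
}
```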

Question 087

You are developing an ecommerce application that stores customer, order, and inventory data as relational tables inside Cloud Spanner.

During a recent load test, you discover that Spanner performance is not scaling linearly as expected.

What is the most likely cause?

- A. The use of 64-bit numeric types for 32-bit numbers.

- B. The use of the STRING data type for arbitrary-precision values.

- C. The use of Version 1 UUIDs as primary keys that increase monotonically.

- D. The use of LIKE instead of STARTS_WITH keyword for parameterized SQL queries.

Answer: C
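
Monotonically increasing keys, such as time-based version 1 UUIDs, append every new row at the end of the key space, which concentrates writes on a single split. Spanner's schema-design guidance suggests alternatives such as random version 4 UUIDs or bit-reversed sequential values; the sketch below shows both with standard JDK calls. The class and method names are hypothetical.

```java
import java.util.UUID;

final class SpannerKeys {
    // Version 4 UUIDs are random, so consecutive inserts land at scattered key positions.
    static String randomKey() {
        return UUID.randomUUID().toString();
    }

    // Reverses the bits of a sequential ID so that 1, 2, 3, ... map to widely spread values.
    // Bit reversal is a bijection, so distinct IDs stay distinct; results may be negative,
    // which an INT64 key column accepts.
    static long bitReversedKey(long sequentialId) {
        return Long.reverse(sequentialId);
    }
}
```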

Question 088

You are developing an application that reads credit card data from a Pub/Sub subscription.

You have written code and completed unit testing. You need to test the Pub/Sub integration before deploying to Google Cloud.

What should you do?

- A. Create a service to publish messages, and deploy the Pub/Sub emulator. Generate random content in the publishing service, and publish to the emulator.

- B. Create a service to publish messages to your application. Collect the messages from Pub/Sub in production, and replay them through the publishing service.

- C. Create a service to publish messages, and deploy the Pub/Sub emulator. Collect the messages from Pub/Sub in production, and publish them to the emulator.

- D. Create a service to publish messages, and deploy the Pub/Sub emulator. Publish a standard set of testing messages from the publishing service to the emulator.

Answer: D

Question 089

You are designing an application that will subscribe to and receive messages from a single Pub/Sub topic and insert corresponding rows into a database.

Your application runs on Linux and leverages preemptible virtual machines to reduce costs. You need to create a shutdown script that will initiate a graceful shutdown.

What should you do?

- A. Write a shutdown script that uses inter-process signals to notify the application process to disconnect from the database.

- B. Write a shutdown script that broadcasts a message to all signed-in users that the Compute Engine instance is going down and instructs them to save current work and sign out.

- C. Write a shutdown script that writes a file in a location that is being polled by the application once every five minutes. After the file is read, the application disconnects from the database.

- D. Write a shutdown script that publishes a message to the Pub/Sub topic announcing that a shutdown is in progress. After the application reads the message, it disconnects from the database.

Answer: A

Reference:

– Running shutdown scripts | Compute Engine Documentation | Google Cloud
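
With answer A, the shutdown script signals the application (for example with kill -TERM and the process ID), and the application reacts by closing its database connection. On the JVM, SIGTERM runs registered shutdown hooks, so a minimal sketch can attach the cleanup through `Runtime.addShutdownHook`. `DatabaseConnection` is a hypothetical placeholder for the real client; because a preemptible VM gets only about 30 seconds of notice, the cleanup should stay short.

```java
import java.util.concurrent.atomic.AtomicBoolean;

final class GracefulSubscriber {
    // Hypothetical placeholder for a real database client.
    interface DatabaseConnection extends AutoCloseable {
        @Override
        void close();
    }

    private final DatabaseConnection db;
    private final AtomicBoolean running = new AtomicBoolean(true);

    GracefulSubscriber(DatabaseConnection db) {
        this.db = db;
    }

    // The JVM runs shutdown hooks on SIGTERM, e.g. when a shutdown script runs kill -TERM <pid>.
    void installShutdownHook() {
        Runtime.getRuntime().addShutdownHook(new Thread(this::shutdown, "graceful-shutdown"));
    }

    // A message loop would check this flag before pulling the next message.
    boolean isRunning() {
        return running.get();
    }

    // Marks the subscriber as stopped, then closes the database connection exactly once.
    void shutdown() {
        if (running.compareAndSet(true, false)) {
            db.close();
        }
    }
}
```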

Question 090

You work for a web development team at a small startup.

Your team is developing a Node.js application using Google Cloud services, including Cloud Storage and Cloud Build. The team uses a Git repository for version control. Your manager calls you over the weekend and instructs you to make an emergency update to one of the company’s websites, and you’re the only developer available. You need to access Google Cloud to make the update, but you don’t have your work laptop. You are not allowed to store source code locally on a non-corporate computer.

How should you set up your developer environment?

- A. Use a text editor and the Git command line to send your source code updates as pull requests from a public computer.

- B. Use a text editor and the Git command line to send your source code updates as pull requests from a virtual machine running on a public computer.

- C. Use Cloud Shell and the built-in code editor for development. Send your source code updates as pull requests.

- D. Use a Cloud Storage bucket to store the source code that you need to edit. Mount the bucket to a public computer as a drive, and use a code editor to update the code. Turn on versioning for the bucket, and point it to the team’s Git repository.

Answer: C

Reference:

– Contributing to projects – GitHub Enterprise Server 3.3 Docs

Question 091

Your team develops services that run on Google Kubernetes Engine.

You need to standardize their log data using Google-recommended practices and make the data more useful in the fewest number of steps.

What should you do? (Choose two.)

- A. Create aggregated exports on application logs to BigQuery to facilitate log analytics.

- B. Create aggregated exports on application logs to Cloud Storage to facilitate log analytics.

- C. Write log output to standard output (stdout) as single-line JSON to be ingested into Cloud Logging as structured logs.

- D. Mandate the use of the Logging API in the application code to write structured logs to Cloud Logging.

- E. Mandate the use of the Pub/Sub API to write structured data to Pub/Sub and create a Dataflow streaming pipeline to normalize logs and write them to BigQuery for analytics.

Answer: A, C
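Option C works because GKE's logging agent collects everything a container writes to stdout. Any line that is a single valid JSON object is stored as a structured jsonPayload, and special keys such as `severity` are mapped onto the log entry. Below is a minimal Python sketch of that pattern. The helper name `log_structured` and the example fields are illustrative assumptions, not part of any Google library.

```python
import json
import sys


def log_structured(severity: str, message: str, **fields) -> str:
    """Write one log record to stdout as a single-line JSON object.

    `severity` and `message` are keys Cloud Logging treats specially;
    any extra keyword arguments become additional jsonPayload fields.
    """
    record = {"severity": severity, "message": message, **fields}
    # json.dumps without indent emits one line; embedded newlines are escaped.
    line = json.dumps(record, separators=(",", ":"), default=str)
    print(line, file=sys.stdout, flush=True)
    return line


if __name__ == "__main__":
    log_structured("INFO", "order created", order_id=1234, customer="example")
    log_structured("ERROR", "payment failed\nretry scheduled", order_id=1234)
```

Because the application only prints to stdout, you do not need to add a logging client library or credentials to the code.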

Question 092

You are designing a deployment technique for your new applications on Google Cloud.

As part of your deployment planning, you want to use live traffic to gather performance metrics for both new and existing applications. You need to test against the full production load prior to launch.

What should you do?

- A. Use canary deployment.

- B. Use blue/green deployment.

- C. Use rolling updates deployment.

- D. Use A/B testing with traffic mirroring during deployment.

Answer: D

Reference:

– Application deployment and testing strategies | Cloud Architecture Center
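Traffic mirroring, also called shadowing, sends a copy of each live request to the new version. Only the existing version's response goes back to users, so the new version sees the full production load without affecting anyone. In practice you configure this in a load balancer or service mesh, for example Istio's `mirror` setting. The Python sketch below only models the request flow in-process, and the handler names are hypothetical.

```python
from typing import Callable, Dict, List

Request = Dict[str, object]
Response = Dict[str, object]
Handler = Callable[[Request], Response]


def make_mirroring_handler(
    primary: Handler, shadow: Handler, shadow_log: List[Response]
) -> Handler:
    """Serve every request from `primary` and replay a copy against `shadow`.

    Only the primary response reaches the caller; shadow responses (or errors)
    are recorded in `shadow_log` so the two versions can be compared.
    """

    def handle(request: Request) -> Response:
        response = primary(request)
        try:
            # Pass a copy so the shadow cannot mutate the live request.
            shadow_log.append(shadow(dict(request)))
        except Exception as exc:  # a failing shadow must never affect users
            shadow_log.append({"error": repr(exc)})
        return response

    return handle
```

Real mirroring is asynchronous (fire-and-forget). This sketch calls the shadow synchronously only to keep the flow easy to follow.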

Question 093

You support an application that uses the Cloud Storage API.

You review the logs and discover multiple HTTP 503 Service Unavailable error responses from the API. Your application logs the error and does not take any further action. You want to implement Google-recommended retry logic to improve success rates.

Which approach should you take?

- A. Retry the failures in batch after a set number of failures is logged.

- B. Retry each failure at a set time interval up to a maximum number of times.

- C. Retry each failure at increasing time intervals up to a maximum number of tries.

- D. Retry each failure at decreasing time intervals up to a maximum number of tries.

Answer: C
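Option C describes truncated exponential backoff, which Google recommends for retryable Cloud Storage errors such as 429 and 5xx. Between attempts, the client waits roughly 2^n seconds plus random jitter, capped at a maximum delay. Below is a minimal Python sketch. It assumes a hypothetical `TransientError` stands in for a 503 response; the official client libraries already ship configurable retry logic.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a retryable response such as HTTP 503."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_tries: int = 5,
    initial_delay: float = 1.0,
    multiplier: float = 2.0,
    max_delay: float = 32.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with truncated exponential backoff."""
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    delay = initial_delay
    for attempt in range(1, max_tries + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_tries:
                raise  # give up and surface the last error
            # Wait 1s, 2s, 4s, ... plus up to 1s of random jitter, capped at max_delay.
            sleep(min(delay + random.uniform(0.0, 1.0), max_delay))
            delay = min(delay * multiplier, max_delay)
    raise AssertionError("unreachable")
```

The jitter keeps many clients that failed at the same moment from retrying in lockstep. The cap on tries keeps a persistent outage from turning into an endless loop.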

Question 094

You need to redesign the ingestion of audit events from your authentication service to allow it to handle a large increase in traffic.

Currently, the audit service and the authentication system run in the same Compute Engine virtual machine. You plan to use the following Google Cloud tools in the new architecture:

– Multiple Compute Engine machines, each running an instance of the authentication service

– Multiple Compute Engine machines, each running an instance of the audit service

– Pub/Sub to send the events from the authentication services.

How should you set up the topics and subscriptions to ensure that the system can handle a large volume of messages and can scale efficiently?

- A. Create one Pub/Sub topic. Create one pull subscription to allow the audit services to share the messages.

- B. Create one Pub/Sub topic. Create one pull subscription per audit service instance to allow the services to share the messages.

- C. Create one Pub/Sub topic. Create one push subscription with the endpoint pointing to a load balancer in front of the audit services.

- D. Create one Pub/Sub topic per authentication service. Create one pull subscription per topic to be used by one audit service.

- E. Create one Pub/Sub topic per authentication service. Create one push subscription per topic, with the endpoint pointing to one audit service.

Answer: A
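Option A uses one topic and one pull subscription. Pub/Sub spreads that subscription's messages across all subscribers pulling from it, so adding audit-service instances adds throughput. With one subscription per instance (option B), every instance would receive every message instead. The Python sketch below simulates the shared-subscription behavior with a standard-library queue. It is not the Pub/Sub client API, and real Pub/Sub delivery is at-least-once, so consumers should still tolerate occasional duplicates.

```python
import queue
import threading
from typing import List, Tuple


def run_shared_subscription(messages: List[int], num_workers: int) -> List[Tuple[int, int]]:
    """Simulate N audit-service instances pulling from one shared subscription.

    Returns (worker_id, message) pairs; each message is handled by one worker.
    """
    subscription: "queue.Queue[int]" = queue.Queue()
    for m in messages:
        subscription.put(m)  # stands in for authentication services publishing

    processed: List[Tuple[int, int]] = []
    lock = threading.Lock()

    def worker(worker_id: int) -> None:
        while True:
            try:
                msg = subscription.get_nowait()  # stands in for a pull
            except queue.Empty:
                return
            with lock:
                processed.append((worker_id, msg))
            subscription.task_done()  # stands in for an ack

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed
```

Scaling the audit side then only means starting more workers against the same subscription, with no topic or subscription changes.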

Question 095

You are developing a marquee stateless web application that will run on Google Cloud.

The rate of the incoming user traffic is expected to be unpredictable, with no traffic on some days and large spikes on other days. You need the application to automatically scale up and down, and you need to minimize the cost associated with running the application.

What should you do?

- A. Build the application in Python with Cloud Firestore as the database. Deploy the application to Cloud Run.

- B. Build the application in C# with Cloud Firestore as the database. Deploy the application to App Engine flexible environment.

- C. Build the application in Python with Cloud SQL as the database. Deploy the application to App Engine standard environment.

- D. Build the application in Python with Cloud Firestore as the database. Deploy the application to a Compute Engine managed instance group with autoscaling.

Answer: A
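Cloud Run runs stateless containers and adds instances as requests arrive. It can also scale to zero when there is no traffic, so idle days cost little or nothing. The main contract is that the container listens for HTTP on the port given in the `PORT` environment variable. Below is a minimal standard-library Python sketch of such a service; the handler and response text are illustrative.

```python
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional


class HelloHandler(BaseHTTPRequestHandler):
    """Stateless handler: nothing is kept in memory between requests."""

    def do_GET(self) -> None:
        body = b"Hello from Cloud Run\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: Optional[int] = None) -> ThreadingHTTPServer:
    """Bind to $PORT (set by Cloud Run), falling back to 8080 locally."""
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    return ThreadingHTTPServer(("0.0.0.0", port), HelloHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Keeping all state in Firestore rather than in the process is what lets any instance serve any request, and lets instances be added or removed freely.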

Question 096

You have written a Cloud Function that accesses other Google Cloud resources.

You want to secure the environment using the principle of least privilege.

What should you do?

- A. Create a new service account that has Editor authority to access the resources. The deployer is given permission to get the access token.

- B. Create a new service account that has a custom IAM role to access the resources. The deployer is given permission to get the access token.

- C. Create a new service account that has Editor authority to access the resources. The deployer is given permission to act as the new service account.

- D. Create a new service account that has a custom IAM role to access the resources. The deployer is given permission to act as the new service account.

Answer: D

Reference:

– Least privilege for Cloud Functions using Cloud IAM | Google Cloud Blog
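Option D has two parts. First, a custom role lists only the permissions the function needs. Second, the deployer is allowed to act as the function's service account through the `iam.serviceAccounts.actAs` permission, which `roles/iam.serviceAccountUser` includes, instead of receiving broad roles such as Editor. The Python sketch below only builds these as plain data. The deployer email and the Cloud Storage read permissions are hypothetical examples, and you would still apply the results with gcloud or the IAM API.

```python
import json
from typing import Dict, List


def custom_role_definition(title: str, permissions: List[str]) -> Dict[str, object]:
    """Custom role body listing only the given permissions (deduplicated)."""
    if any("*" in p for p in permissions):
        raise ValueError("least privilege: list permissions explicitly")
    return {
        "title": title,
        "description": "Minimal permissions for the Cloud Function runtime",
        "stage": "GA",
        "includedPermissions": sorted(set(permissions)),
    }


def deployer_binding(deployer_email: str) -> Dict[str, object]:
    """Binding to set on the function's service account, not on the project."""
    return {
        "role": "roles/iam.serviceAccountUser",  # includes iam.serviceAccounts.actAs
        "members": [f"user:{deployer_email}"],
    }


if __name__ == "__main__":
    role = custom_role_definition(
        "Function Storage Reader",
        ["storage.objects.get", "storage.objects.list"],  # hypothetical needs
    )
    print(json.dumps(role, indent=2))
    print(json.dumps(deployer_binding("deployer@example.com"), indent=2))
```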

Question 097

You are a SaaS provider deploying dedicated blogging software to customers in your Google Kubernetes Engine (GKE) cluster.

You want to configure a secure multi-tenant platform to ensure that each customer has access to only their own blog and can’t affect the workloads of other customers.

What should you do?

- A. Enable Application-layer Secrets on the GKE cluster to protect the cluster.

- B. Deploy a namespace per tenant and use Network Policies in each blog deployment.

- C. Use GKE Audit Logging to identify malicious containers and delete them on discovery.

- D. Build a custom image of the blogging software and use Binary Authorization to prevent untrusted image deployments.

Answer: B

Reference:

– Cluster multi-tenancy | Google Kubernetes Engine (GKE)
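Option B isolates tenants at two layers: a Kubernetes namespace per customer, plus a NetworkPolicy in each namespace that admits traffic only from pods in that same namespace. The Python sketch below generates those manifests as a Kubernetes `List` that can be serialized to JSON for `kubectl apply`. The tenant names are hypothetical. A real blog would also need a rule admitting traffic from its ingress or load balancer, and network policy enforcement must be enabled on the GKE cluster.

```python
import json
from typing import Dict, List


def tenant_manifests(tenant: str) -> List[Dict[str, object]]:
    """Namespace plus a same-namespace-only ingress policy for one tenant."""
    namespace = f"blog-{tenant}"
    ns = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": namespace, "labels": {"tenant": tenant}},
    }
    policy = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "same-namespace-only", "namespace": namespace},
        "spec": {
            "podSelector": {},  # applies to every pod in this namespace
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches only pods in this namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }
    return [ns, policy]


if __name__ == "__main__":
    items = [m for t in ("alice", "bob") for m in tenant_manifests(t)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```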

Question 098

You have decided to migrate your Compute Engine application to Google Kubernetes Engine.

You need to build a container image and push it to Artifact Registry using Cloud Build.

What should you do? (Choose two.)

- A. Run gcloud builds submit in the directory that contains the application source code.

- B. Run gcloud run deploy app-name --image gcr.io/$PROJECT_ID/app-name in the directory that contains the application source code.

- C. Run gcloud container images add-tag gcr.io/$PROJECT_ID/app-name gcr.io/$PROJECT_ID/app-name:latest in the directory that contains the application source code.

- D. In the application source directory, create a file named cloudbuild.yaml that contains the following contents:

steps:
- name: 'gcr.io/cloud-builders/docker'
  args: ['build', '-t', 'gcr.io/$PROJECT_ID/app-name', '.']
- name: 'gcr.io/cloud-builders/docker'
  args: ['push', 'gcr.io/$PROJECT_ID/app-name']

- E. In the application source directory, create a file named cloudbuild.yaml that contains the following contents:

steps:
- name: 'gcr.io/cloud-builders/gcloud'
  args: ['app', 'deploy']
timeout: '1600s'

Answer: A, D

Question 099

You are developing an internal application that will allow employees to organize community events within your company.

You deployed your application on a single Compute Engine instance. Your company uses Google Workspace (formerly G Suite), and you need to ensure that the company employees can authenticate to the application from anywhere.

What should you do?

- A. Add a public IP address to your instance, and restrict access to the instance using firewall rules. Allow your company’s proxy as the only source IP address.

- B. Add an HTTP(S) load balancer in front of the instance, and set up Identity-Aware Proxy (IAP). Configure the IAP settings to allow your company domain to access the website.

- C. Set up a VPN tunnel between your company network and your instance’s VPC location on Google Cloud. Configure the required firewall rules and routing information to both the on-premises and Google Cloud networks.

- D. Add a public IP address to your instance, and allow traffic from the internet. Generate a random hash, and create a subdomain that includes this hash and points to your instance. Distribute this DNS address to your company’s employees.

Answer: B

Question 100

Your development team is using Cloud Build to promote a Node.js application built on App Engine from your staging environment to production.

The application relies on several directories of photos stored in a Cloud Storage bucket named webphotos-staging in the staging environment. After the promotion, these photos must be available in a Cloud Storage bucket named webphotos-prod in the production environment. You want to automate the process where possible.

What should you do?

- A. Manually copy the photos to webphotos-prod.

- B. Add a startup script in the application’s app.yaml file to move the photos from webphotos-staging to webphotos-prod.

- C. Add a build step in the cloudbuild.yaml file before the promotion step with the arguments:

- name: 'gcr.io/cloud-builders/gsutil'
  args: ['cp', '-r', 'gs://webphotos-staging', 'gs://webphotos-prod']
  waitFor: ['-']

- D. Add a build step in the cloudbuild.yaml file before the promotion step with the arguments:

- name: 'gcr.io/cloud-builders/gcloud'
  args: ['cp', '-A', 'gs://webphotos-staging', 'gs://webphotos-prod']
  waitFor: ['-']

Answer: C

Question 101

You are developing a web application that will be accessible over both HTTP and HTTPS and will run on Compute Engine instances.

On occasion, you will need to SSH from your remote laptop into one of the Compute Engine instances to conduct maintenance on the app.

How should you configure the instances while following Google-recommended best practices?

- A. Set up a backend with Compute Engine web server instances with a private IP address behind a TCP proxy load balancer.

- B. Configure the firewall rules to allow all ingress traffic to connect to the Compute Engine web servers, with each server having a unique external IP address.

- C. Configure Cloud Identity-Aware Proxy API for SSH access. Then configure the Compute Engine servers with private IP addresses behind an HTTP(S) load balancer for the application web traffic.

- D. Set up a backend with Compute Engine web server instances with a private IP address behind an HTTP(S) load balancer. Set up a bastion host with a public IP address and open firewall ports. Connect to the web instances using the bastion host.

Answer: C

Reference: