Ace Your Cloud Architect Practice Certification with Practice Exams.

Google Cloud Certified – Professional Cloud Architect Practice Exam (100 Q)

QUESTION 1

For this question, refer to the JencoMart case study.

The JencoMart security team requires that all Google Cloud Platform infrastructure is deployed using a least privilege model with separation of duties for administration between production and development resources.

What Google domain and project structure should you recommend ?

- A. Create two G Suite accounts to manage users: one for development/test/staging and one for production. Each account should contain one project for every application.

- B. Create two G Suite accounts to manage users: one with a single project for all development applications and one with a single project for all production applications.

- C. Create a single G Suite account to manage users with each stage of each application in its own project.

- D. Create a single G Suite account to manage users with one project for the development/test/staging environment and one project for the production environment.

Correct Answer: D

The principle of least privilege and separation of duties are concepts that, although semantically different, are intrinsically related from the standpoint of security. The intent behind both is to prevent people from having higher privilege levels than they actually need.

– Principle of Least Privilege: Users should only have the least amount of privileges required to perform their job and no more. This reduces authorization exploitation by limiting access to resources such as targets, jobs, or monitoring templates for which they are not authorized.

– Separation of Duties: Beyond limiting user privilege level, you also limit user duties, or the specific jobs they can perform. No user should be given responsibility for more than one related function. This limits the ability of a user to perform a malicious action and then cover up that action.
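As a rough illustration of how the two principles might be audited, the sketch below flags any user who holds an admin role on both a development and a production project. The `Grant` record, the project names, and the `admins_in_both` helper are hypothetical and are not part of any Google Cloud API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One role binding: a user holds a role on a project (hypothetical model)."""
    user: str
    project: str
    role: str


def admins_in_both(grants, dev_project, prod_project, admin_role="admin"):
    """Return users who hold the admin role on both projects.

    A non-empty result signals a separation-of-duties violation.
    """
    dev_admins = {g.user for g in grants
                  if g.project == dev_project and g.role == admin_role}
    prod_admins = {g.user for g in grants
                   if g.project == prod_project and g.role == admin_role}
    return sorted(dev_admins & prod_admins)


# Hypothetical grants for illustration only.
grants = [
    Grant("alice", "jenco-dev", "admin"),
    Grant("bob", "jenco-prod", "admin"),
    Grant("carol", "jenco-dev", "admin"),
    Grant("carol", "jenco-prod", "admin"),
    Grant("dave", "jenco-prod", "viewer"),
]

violations = admins_in_both(grants, "jenco-dev", "jenco-prod")
print(violations)  # ['carol']
```

Keeping development and production in separate projects is what makes a check like this possible, because roles are then granted per project rather than across all resources at once.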

Reference:

– Separation of duties

QUESTION 2

For this question, refer to the JencoMart case study.

A few days after JencoMart migrates the user credentials database to Google Cloud Platform and shuts down the old server, the new database server stops responding to SSH connections. It is still serving database requests to the application servers correctly.

What three steps should you take to diagnose the problem ? (Choose 3 answers.)

- A. Delete the virtual machine (VM) and disks and create a new one.

- B. Delete the instance, attach the disk to a new VM, and investigate.

- C. Take a snapshot of the disk and connect to a new machine to investigate.

- D. Check inbound firewall rules for the network the machine is connected to.

- E. Connect the machine to another network with very simple firewall rules and investigate.

- F. Print the Serial Console output for the instance for troubleshooting, activate the interactive console, and investigate.

Correct Answer: C, D, F

D: Handling “Unable to connect on port 22” error message

Possible causes include:

– There is no firewall rule allowing SSH access on the port. SSH access on port 22 is enabled on all Google Compute Engine instances by default. If you have disabled access, SSH from the Browser will not work. If you run sshd on a port other than 22, you need to enable the access to that port with a custom firewall rule.

– The firewall rule allowing SSH access is enabled, but is not configured to allow connections from GCP Console services. Source IP addresses for browser-based SSH sessions are dynamically allocated by GCP Console and can vary from session to session.

F: Handling “Could not connect, retrying…” error

You can verify that the daemon is running by navigating to the serial console output page and looking for output lines prefixed with the accounts-from-metadata: string. If you are using a standard image but you do not see these output prefixes in the serial console output, the daemon might be stopped. Reboot the instance to restart the daemon.
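The firewall check described under D can be sketched as a small function. This is a simplified model, not the Compute Engine API: the rule dictionaries and the `allows_ssh` helper are hypothetical, and the sketch ignores rule priorities and deny rules. It shows how database traffic can keep working while SSH from an outside address is blocked.

```python
import ipaddress


def allows_ssh(rules, client_ip, port=22):
    """Return True if any ingress allow rule admits TCP traffic on `port` from client_ip."""
    client = ipaddress.ip_address(client_ip)
    for rule in rules:
        if rule["direction"] != "INGRESS" or rule["action"] != "allow":
            continue
        if rule["protocol"] != "tcp" or port not in rule["ports"]:
            continue
        if any(client in ipaddress.ip_network(cidr)
               for cidr in rule["source_ranges"]):
            return True
    return False


# Hypothetical rules: database and SSH traffic are allowed from the internal range only.
rules = [
    {"direction": "INGRESS", "action": "allow", "protocol": "tcp",
     "ports": [3306], "source_ranges": ["10.0.0.0/8"]},
    {"direction": "INGRESS", "action": "allow", "protocol": "tcp",
     "ports": [22], "source_ranges": ["10.0.0.0/8"]},
]

internal_ok = allows_ssh(rules, "10.1.2.3")      # internal address, admitted
external_ok = allows_ssh(rules, "203.0.113.7")   # outside address, not admitted
```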

Reference:

– SSH from the browser

QUESTION 3

For this question, refer to the JencoMart case study.

JencoMart has decided to migrate user profile storage to Google Cloud Datastore and the application servers to Google Compute Engine (GCE).

During the migration, the existing infrastructure will need access to Google Cloud Datastore to upload the data.

What service account key-management strategy should you recommend ?

- A. Provision service account keys for the on-premises infrastructure and for the GCE virtual machines (VMs).

- B. Authenticate the on-premises infrastructure with a user account and provision service account keys for the VMs.

- C. Provision service account keys for the on-premises infrastructure and use Google Cloud Platform (GCP) managed keys for the VMs.

- D. Deploy a custom authentication service on GCE / Google Kubernetes Engine (GKE) for the on-premises infrastructure and use GCP managed keys for the VMs.

Correct Answer: C

Migrating data to Google Cloud Platform

Let’s say that you have some data processing that happens on another cloud provider and you want to transfer the processed data to Google Cloud Platform. You can use a service account from the virtual machines on the external cloud to push the data to Google Cloud Platform. To do this, you must create and download a service account key when you create the service account and then use that key from the external process to call the Google Cloud Platform APIs.

Reference:

– Understanding service accounts

QUESTION 4

For this question, refer to the JencoMart case study.

JencoMart has built a version of their application on Google Cloud Platform that serves traffic to Asia.

You want to measure success against their business and technical goals.

Which metrics should you track ?

- A. Error rates for requests from Asia.

- B. Latency difference between US and Asia.

- C. Total visits, error rates, and latency from Asia.

- D. Total visits and average latency for users from Asia.

- E. The number of character sets present in the database.

Correct Answer: D

From scenario:

Business Requirements include: Expand services into Asia.

Technical Requirements include: Decrease latency in Asia.

QUESTION 5

For this question, refer to the JencoMart case study.

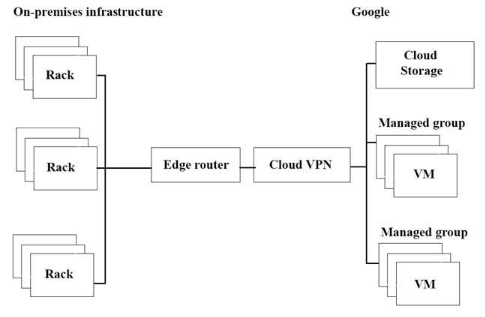

The migration of JencoMart’s application to Google Cloud Platform (GCP) is progressing too slowly. The infrastructure is shown in the diagram.

You want to maximize throughput.

What are three potential bottlenecks ? (Choose 3 answers.)

- A. A single VPN tunnel, which limits throughput.

- B. A tier of Google Cloud Storage that is not suited for this task.

- C. A copy command that is not suited to operate over long distances.

- D. Fewer virtual machines (VMs) in GCP than on-premises machines.

- E. A separate storage layer outside the VMs, which is not suited for this task.

- F. Complicated internet connectivity between the on-premises infrastructure and GCP.

Correct Answer: A, C, E

QUESTION 6

For this question, refer to the JencoMart case study.

JencoMart wants to move their User Profiles database to Google Cloud Platform.

Which Google Database should they use ?

- A. Google Cloud Spanner

- B. Google BigQuery

- C. Google Cloud SQL

- D. Google Cloud Datastore

Correct Answer: D

Common workloads for Google Cloud Datastore:

– User profiles

– Product catalogs

– Game state

Reference:

– Cloud storage products

– Datastore Overview

QUESTION 7

For this question, refer to the Mountkirk Games case study.

Mountkirk Games wants you to design their new testing strategy. How should the test coverage differ from their existing backends on the other platforms ?

- A. Tests should scale well beyond the prior approaches.

- B. Unit tests are no longer required, only end-to-end tests.

- C. Tests should be applied after the release is in the production environment.

- D. Tests should include directly testing the Google Cloud Platform (GCP) infrastructure.

Correct Answer: A

From Scenario:

A few of their games were more popular than expected, and they had problems scaling their application servers, MySQL databases, and analytics tools.

Requirements for Game Analytics Platform include: Dynamically scale up or down based on game activity.

QUESTION 8

For this question, refer to the Mountkirk Games case study.

Mountkirk Games has deployed their new backend on Google Cloud Platform (GCP). You want to create a thorough testing process for new versions of the backend before they are released to the public. You want the testing environment to scale in an economical way.

How should you design the process ?

- A. Create a scalable environment in GCP for simulating production load.

- B. Use the existing infrastructure to test the GCP-based backend at scale.

- C. Build stress tests into each component of your application using resources internal to GCP to simulate load.

- D. Create a set of static environments in GCP to test different levels of load – for example, high, medium, and low.

Correct Answer: A

From scenario: Requirements for Game Backend Platform

– Dynamically scale up or down based on game activity.

– Connect to a managed NoSQL database service.

– Run a customized Linux distro.

QUESTION 9

For this question, refer to the Mountkirk Games case study.

Mountkirk Games wants to set up a continuous delivery pipeline.

Their architecture includes many small services that they want to be able to update and roll back quickly.

Mountkirk Games has the following requirements:

- Services are deployed redundantly across multiple regions in the US and Europe.

- Only frontend services are exposed on the public internet.

- They can provide a single frontend IP for their fleet of services.

- Deployment artifacts are immutable.

Which set of products should they use ?

- A. Google Cloud Storage, Google Cloud Dataflow, Google Compute Engine.

- B. Google Cloud Storage, Google App Engine, Google Network Load Balancer.

- C. Google Container Registry, Google Container Engine, Google HTTP(S) Load Balancer.

- D. Google Cloud Functions, Google Cloud Pub/Sub, Google Cloud Deployment Manager.

Correct Answer: C

QUESTION 10

For this question, refer to the Mountkirk Games case study.

Mountkirk Games’ gaming servers are not automatically scaling properly.

Last month, they rolled out a new feature, which suddenly became very popular.

A record number of users are trying to use the service, but many of them are getting 503 errors and very slow response times.

What should they investigate first ?

- A. Verify that the database is online.

- B. Verify that the project quota hasn’t been exceeded.

- C. Verify that the new feature code did not introduce any performance bugs.

- D. Verify that the load-testing team is not running their tool against production.

Correct Answer: B

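A 503 is a Service Unavailable error. If the database were offline, every user would get the 503 error, not just many of them, so an exceeded project quota that prevents the servers from scaling is the first thing to check.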

QUESTION 11

For this question, refer to the Mountkirk Games case study.

Mountkirk Games needs to create a repeatable and configurable mechanism for deploying isolated application environments.

Developers and testers can access each other’s environments and resources, but they cannot access staging or production resources. The staging environment needs access to some services from production.

What should you do to isolate development environments from staging and production ?

- A. Create a project for development and test and another for staging and production.

- B. Create a network for development and test and another for staging and production.

- C. Create one subnetwork for development and another for staging and production.

- D. Create one project for development, a second for staging and a third for production.

Correct Answer: D

QUESTION 12

For this question, refer to the Mountkirk Games case study.

Mountkirk Games wants to set up a real-time analytics platform for their new game.

The new platform must meet their technical requirements.

Which combination of Google technologies will meet all of their requirements ?

- A. Google Kubernetes Engine, Google Cloud Pub/Sub, and Google Cloud SQL.

- B. Google Cloud Dataflow, Google Cloud Storage, Google Cloud Pub/Sub, and Google BigQuery.

- C. Google Cloud SQL, Google Cloud Storage, Google Cloud Pub/Sub, and Google Cloud Dataflow.

- D. Google Cloud Dataproc, Google Cloud Pub/Sub, Google Cloud SQL, and Google Cloud Dataflow.

- E. Google Cloud Pub/Sub, Google Compute Engine, Google Cloud Storage, and Google Cloud Dataproc.

Correct Answer: B

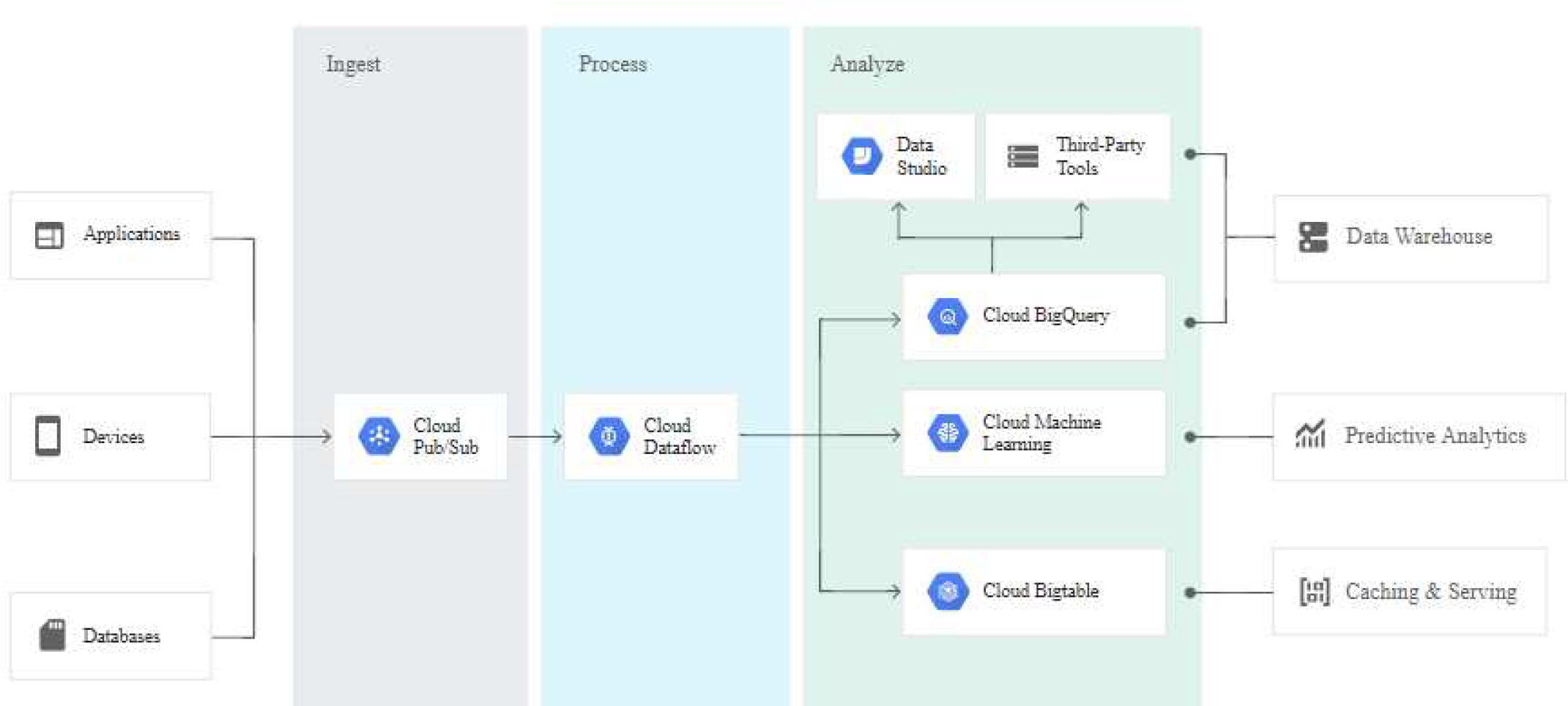

Ingest millions of streaming events per second from anywhere in the world with Google Cloud Pub/Sub, powered by Google’s unique, high-speed private network. Process the streams with Google Cloud Dataflow to ensure reliable, exactly-once, low-latency data transformation. Stream the transformed data into Google BigQuery, the cloud-native data warehousing service, for immediate analysis via SQL or popular visualization tools.

From scenario: They plan to deploy the game’s backend on Google Compute Engine so they can capture streaming metrics, run intensive analytics.

Requirements for Game Analytics Platform.

– Dynamically scale up or down based on game activity.

– Process incoming data on the fly directly from the game servers.

– Process data that arrives late because of slow mobile networks.

– Allow SQL queries to access at least 10 TB of historical data.

– Process files that are regularly uploaded by users’ mobile devices.

– Use only fully managed services.
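The requirement to process data that arrives late can be illustrated with event-time windowing. The sketch below is plain Python, not the Cloud Dataflow or Apache Beam API, and the window size, allowed lateness, and sample events are assumptions chosen for the example. Each event is counted in the window of the time it occurred, as long as it arrives before that window's lateness deadline.

```python
from collections import defaultdict

WINDOW_SECONDS = 60      # tumbling window size (assumed)
ALLOWED_LATENESS = 120   # seconds after window end that late events are still accepted (assumed)


def window_start(event_time):
    """Map an event timestamp (seconds) to the start of its tumbling window."""
    return event_time - (event_time % WINDOW_SECONDS)


def aggregate(events):
    """Count events per event-time window.

    `events` is an iterable of (event_time, arrival_time) pairs.
    An event is dropped when it arrives after its window end plus ALLOWED_LATENESS.
    """
    counts = defaultdict(int)
    dropped = 0
    for event_time, arrival_time in events:
        start = window_start(event_time)
        deadline = start + WINDOW_SECONDS + ALLOWED_LATENESS
        if arrival_time > deadline:
            dropped += 1
            continue
        counts[start] += 1
    return dict(counts), dropped


events = [
    (5, 6),      # on time, window starting at 0
    (59, 61),    # arrives just after the window closes, still counted
    (30, 170),   # delayed by a slow network, within the deadline of 180
    (10, 400),   # too late, dropped
    (65, 66),    # on time, window starting at 60
]

counts, dropped = aggregate(events)
print(counts, dropped)  # {0: 3, 60: 1} 1
```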

Reference:

– Stream analytics

QUESTION 13

For this question, refer to the Mountkirk Games case study.

Mountkirk Games wants to migrate from their current analytics and statistics reporting model to one that meets their technical requirements on Google Cloud Platform.

Which two steps should be part of their migration plan ? (Choose two.)

- A. Evaluate the impact of migrating their current batch ETL code to Google Cloud Dataflow.

- B. Write a schema migration plan to denormalize data for better performance in Google BigQuery.

- C. Draw an architecture diagram that shows how to move from a single MySQL database to a MySQL cluster.

- D. Load 10 TB of analytics data from a previous game into a Google Cloud SQL instance, and run test queries against the full dataset to confirm that they complete successfully.

- E. Integrate Google Cloud Armor to defend against possible SQL injection attacks in analytics files uploaded to Google Cloud Storage.

Correct Answer: A, B

QUESTION 14

For this question, refer to the Mountkirk Games case study.

You need to analyze and define the technical architecture for the compute workloads for your company, Mountkirk Games.

Considering the Mountkirk Games business and technical requirements, what should you do ?

- A. Create network load balancers. Use preemptible Compute Engine instances.

- B. Create network load balancers. Use non-preemptible Compute Engine instances.

- C. Create a global load balancer with managed instance groups and autoscaling policies. Use preemptible Compute Engine instances.

- D. Create a global load balancer with managed instance groups and autoscaling policies. Use non-preemptible Compute Engine instances.

Correct Answer: D

QUESTION 15

For this question, refer to the Mountkirk Games case study.

Mountkirk Games wants to design their solution for the future in order to take advantage of cloud and technology improvements as they become available.

Which two steps should they take ? (Choose two.)

- A. Store as much analytics and game activity data as financially feasible today so it can be used to train machine learning models to predict user behavior in the future.

- B. Begin packaging their game backend artifacts in container images and running them on Google Kubernetes Engine to improve the ability to scale up or down based on game activity.

- C. Set up a CI/CD pipeline using Jenkins and Spinnaker to automate canary deployments and improve development velocity.

- D. Adopt a schema versioning tool to reduce downtime when adding new game features that require storing additional player data in the database.

- E. Implement a weekly rolling maintenance process for the Linux virtual machines so they can apply critical kernel patches and package updates and reduce the risk of 0-day vulnerabilities.

Correct Answer: C, E

QUESTION 16

For this question, refer to the Mountkirk Games case study.

Mountkirk Games wants you to design a way to test the analytics platform’s resilience to changes in mobile network latency.

What should you do ?

- A. Deploy failure injection software to the game analytics platform that can inject additional latency to mobile client analytics traffic.

- B. Build a test client that can be run from a mobile phone emulator on a Compute Engine virtual machine, and run multiple copies in Google Cloud Platform regions all over the world to generate realistic traffic.

- C. Add the ability to introduce a random amount of delay before beginning to process analytics files uploaded from mobile devices.

- D. Create an opt-in beta of the game that runs on players’ mobile devices and collects response times from analytics endpoints running in Google Cloud Platform regions all over the world.

Correct Answer: C
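Option C can be sketched as a thin wrapper that waits a random amount of time before handing an uploaded file to the processing step. Everything here is hypothetical: `process_with_delay`, the sample upload, and the parsing lambda are illustrative names, and the sleep function is injected so the example runs without actually waiting.

```python
import random


def process_with_delay(upload, process, max_delay_seconds, sleep, rng=random):
    """Wait a random delay, then process the uploaded analytics file.

    `sleep` is injected so tests can record delays instead of really sleeping.
    """
    delay = rng.uniform(0, max_delay_seconds)
    sleep(delay)
    return process(upload), delay


recorded = []
result, delay = process_with_delay(
    upload="level=3,score=120",
    process=lambda text: dict(pair.split("=") for pair in text.split(",")),
    max_delay_seconds=30,
    sleep=recorded.append,     # stand-in for time.sleep in this demo
    rng=random.Random(42),     # seeded so runs are repeatable
)
```

In a real test harness, `time.sleep` would be passed as `sleep`, and the downstream results would be compared against a run without the delay.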

QUESTION 17

For this question, refer to the Mountkirk Games case study.

You need to analyze and define the technical architecture for the database workloads for your company, Mountkirk Games.

Considering the business and technical requirements, what should you do ?

- A. Use Google Cloud SQL for time series data, and use Google Cloud Bigtable for historical data queries.

- B. Use Google Cloud SQL to replace MySQL, and use Google Cloud Spanner for historical data queries.

- C. Use Google Cloud Bigtable to replace MySQL, and use Google BigQuery for historical data queries.

- D. Use Google Cloud Bigtable for time series data, use Google Cloud Spanner for transactional data, and use Google BigQuery for historical data queries.

Correct Answer: C

QUESTION 18

For this question, refer to the Mountkirk Games case study.

Which managed storage option meets Mountkirk’s technical requirement for storing game activity in a time series database service ?

- A. Google Cloud Bigtable

- B. Google Cloud Spanner

- C. Google BigQuery

- D. Google Cloud Datastore

Correct Answer: A

QUESTION 19

For this question, refer to the Mountkirk Games case study.

You are in charge of the new Game Backend Platform architecture.

The game communicates with the backend over a REST API.

You want to follow Google-recommended practices.

How should you design the backend ?

- A. Create an instance template for the backend. For every region, deploy it on a multi-zone managed instance group. Use an L4 load balancer.

- B. Create an instance template for the backend. For every region, deploy it on a single-zone managed instance group. Use an L4 load balancer.

- C. Create an instance template for the backend. For every region, deploy it on a multi-zone managed instance group. Use an L7 load balancer.

- D. Create an instance template for the backend. For every region, deploy it on a single-zone managed instance group. Use an L7 load balancer.

Correct Answer: A

Reference:

– Instance templates

– TCP Proxy Load Balancing overview

QUESTION 20

For this question, refer to the TerramEarth case study.

TerramEarth’s CTO wants to use the raw data from connected vehicles to help identify approximately when a vehicle in the field will have a catastrophic failure.

You want to allow analysts to centrally query the vehicle data.

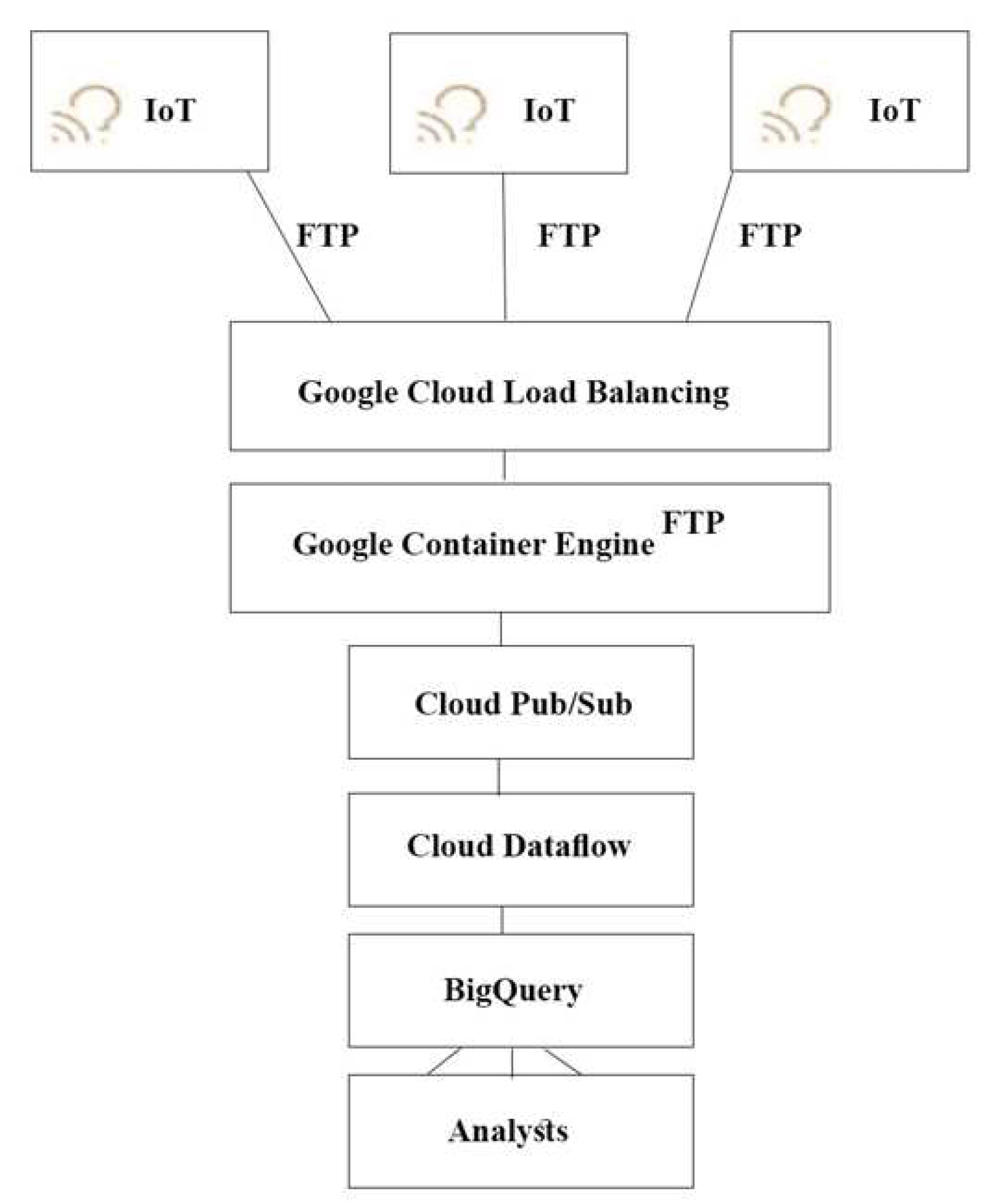

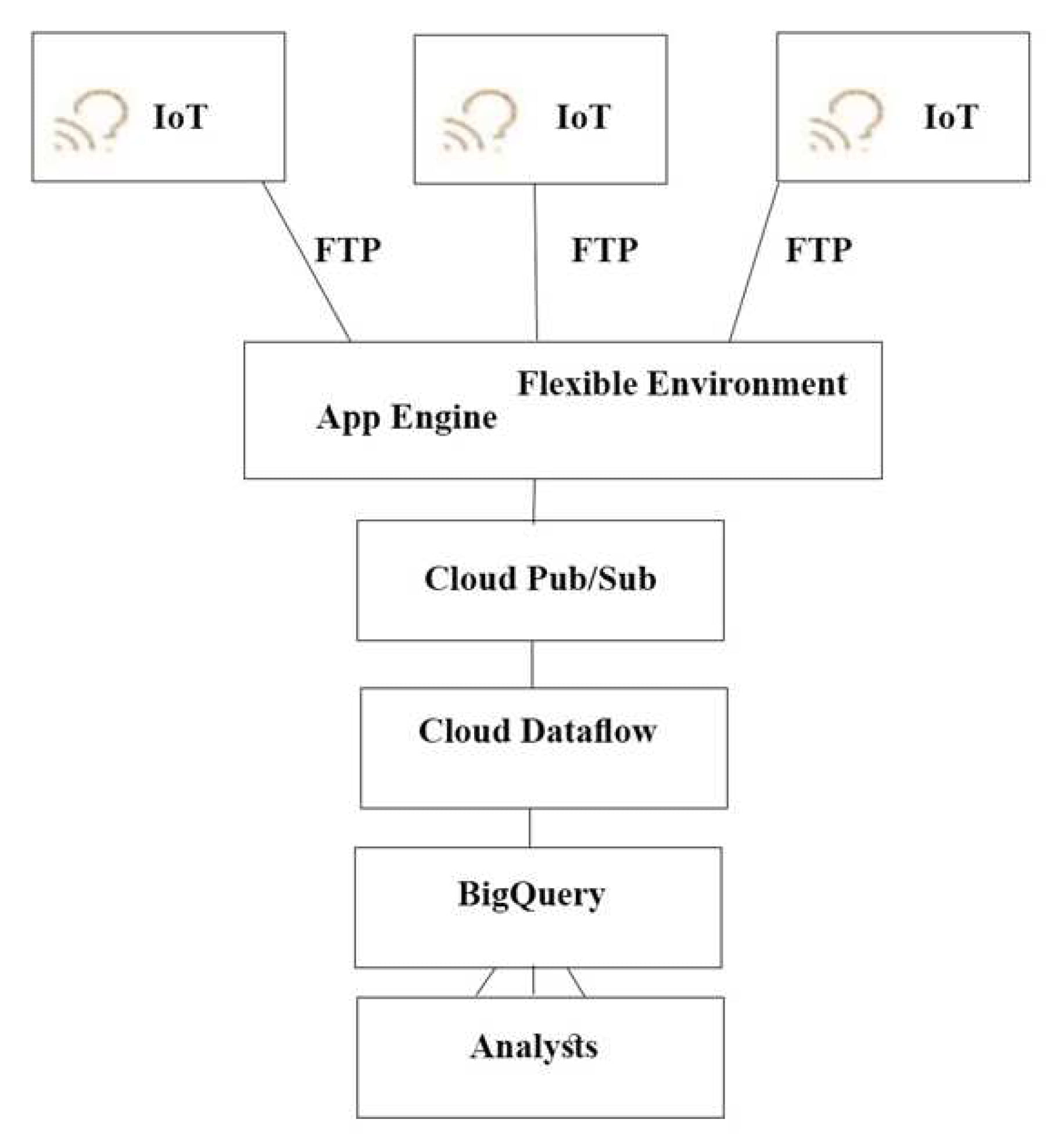

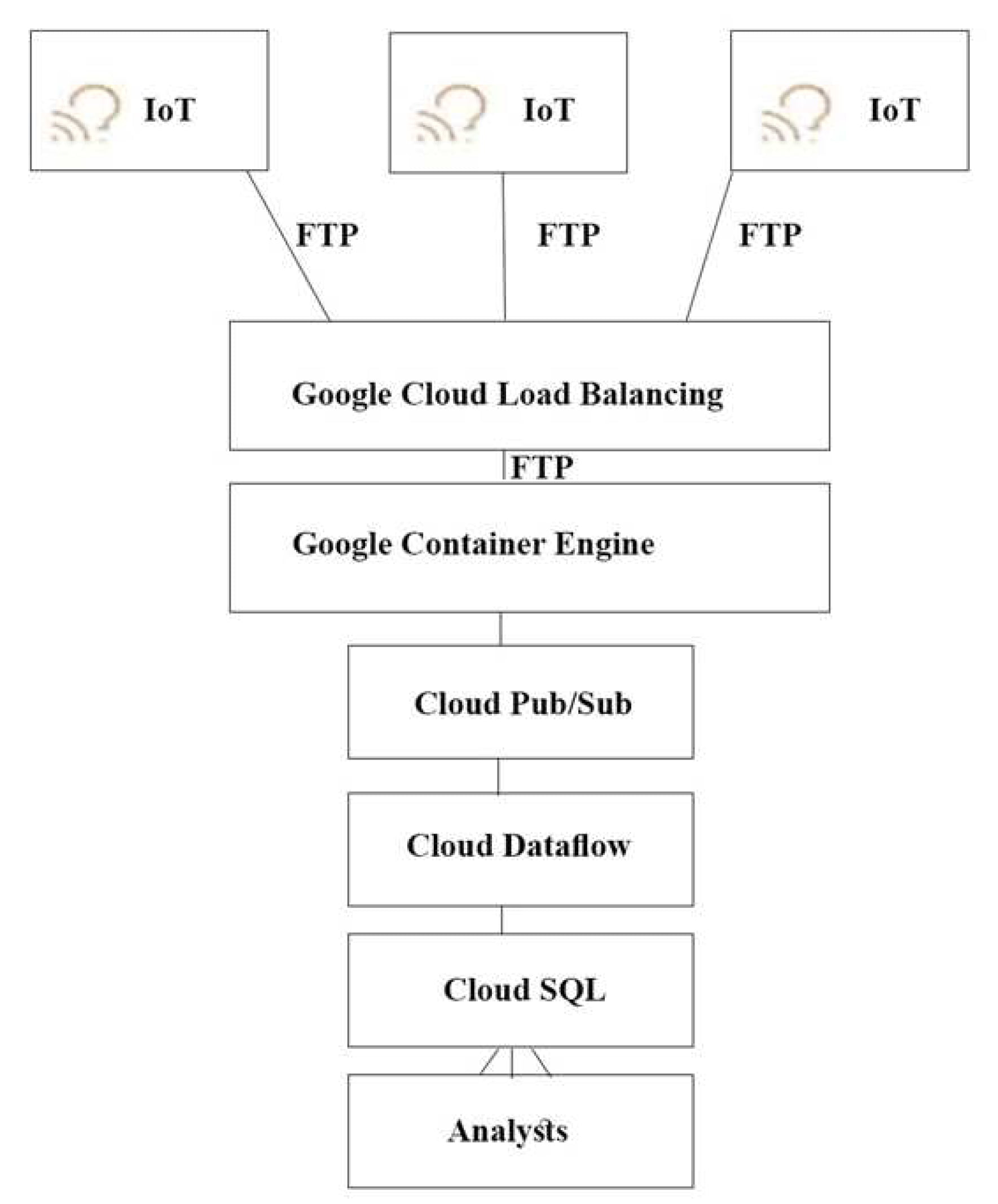

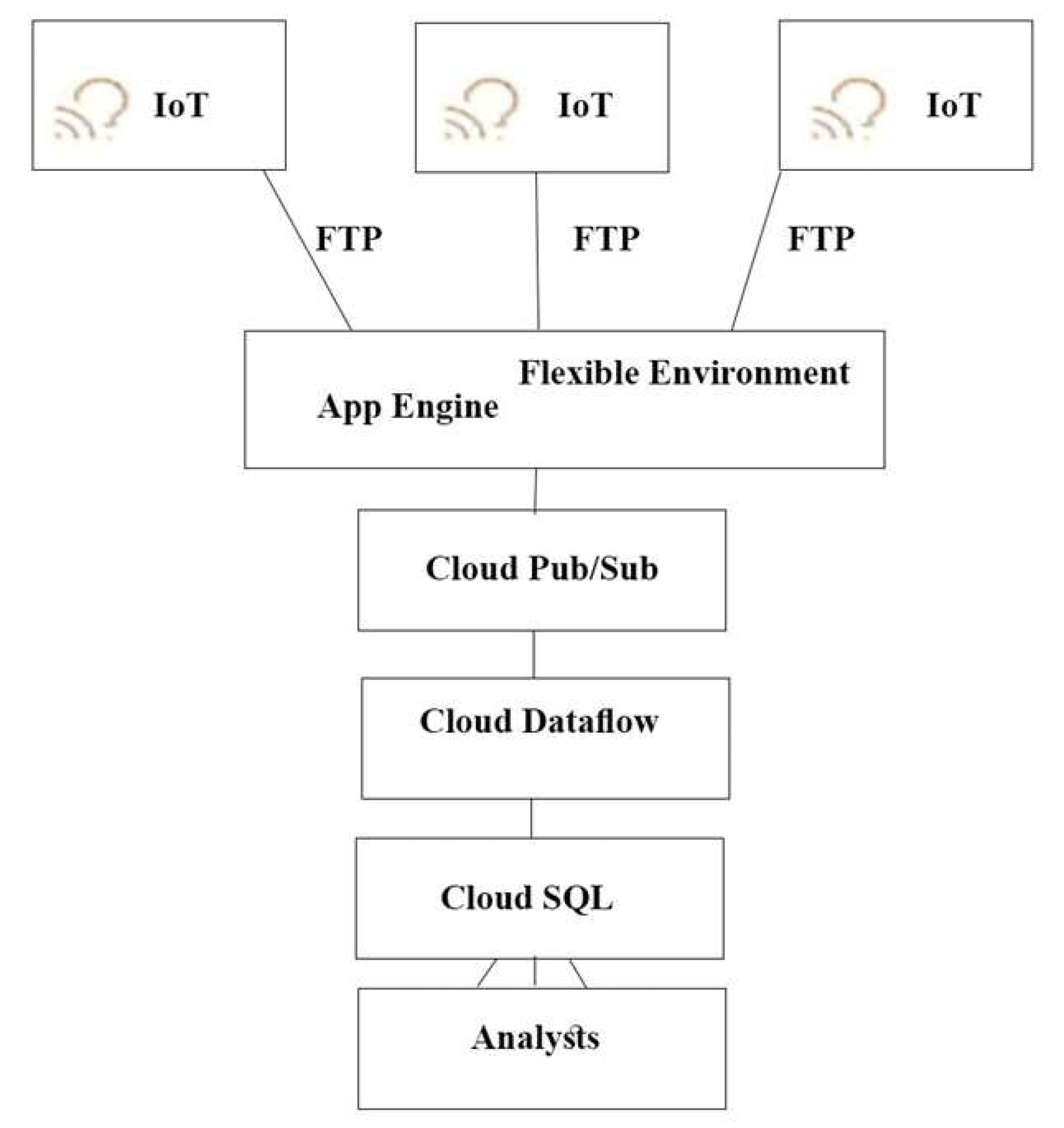

Which architecture should you recommend ?

Correct Answer: A

The push endpoint can be a load balancer.

A container cluster can be used.

Google Cloud Pub/Sub for Stream Analytics

Reference:

– Cloud Pub/Sub

– Google Cloud IoT

– Designing a Connected Vehicle Platform on Cloud IoT Core

– Designing a Connected Vehicle Platform on Cloud IoT Core: Device management

– Google Touts Value of Cloud IoT Core for Analyzing Connected Car Data

QUESTION 21

For this question, refer to the TerramEarth case study.

The TerramEarth development team wants to create an API to meet the company’s business requirements.

You want the development team to focus their development effort on business value versus creating a custom framework.

Which method should they use ?

- A. Use Google App Engine with Google Cloud Endpoints. Focus on an API for dealers and partners.

- B. Use Google App Engine with a JAX-RS Jersey Java-based framework. Focus on an API for the public.

- C. Use Google App Engine with the Swagger (Open API Specification) framework. Focus on an API for the public.

- D. Use Google Container Engine with a Django Python container. Focus on an API for the public.

- E. Use Google Container Engine with a Tomcat container with the Swagger (Open API Specification) framework. Focus on an API for dealers and partners.

Correct Answer: A

Develop, deploy, protect and monitor your APIs with Google Cloud Endpoints. Using an Open API Specification or one of our API frameworks, Google Cloud Endpoints gives you the tools you need for every phase of API development.

From scenario:

Business Requirements

Decrease unplanned vehicle downtime to less than 1 week, without increasing the cost of carrying surplus inventory.

Support the dealer network with more data on how their customers use their equipment to better position new products and services.

Have the ability to partner with different companies – especially with seed and fertilizer suppliers in the fast-growing agricultural business – to create compelling joint offerings for their customers.

Reference:

– Cloud Endpoints

– Getting Started with Endpoints for App Engine standard environment

QUESTION 22

For this question, refer to the TerramEarth case study.

Your development team has created a structured API to retrieve vehicle data.

They want to allow third parties to develop tools for dealerships that use this vehicle event data.

You want to support delegated authorization against this data.

What should you do ?

- A. Build or leverage an OAuth-compatible access control system.

- B. Build SAML 2.0 SSO compatibility into your authentication system.

- C. Restrict data access based on the source IP address of the partner systems.

- D. Create secondary credentials for each dealer that can be given to the trusted third party.

Correct Answer: A

Delegate application authorization with OAuth2.

Google Cloud Platform APIs support OAuth 2.0, and scopes provide granular authorization over the methods that are supported. Google Cloud Platform supports both service-account and user-account OAuth, also called three-legged OAuth.
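The role of scopes in delegated authorization can be sketched as follows. This is not an OAuth library: the scope names, the token dictionary, and `is_authorized` are hypothetical, and a real system would also validate the token's signature, issuer, and expiry before looking at scopes.

```python
def is_authorized(token_scopes, required_scope):
    """Return True if the delegated token carries the scope a method requires."""
    return required_scope in token_scopes


# Hypothetical scopes for the vehicle-data API.
READ_EVENTS = "vehicle.events.read"
WRITE_CONFIG = "vehicle.config.write"

# A dealer approves a third-party tool for read access only.
third_party_token = {"subject": "dealer-123", "scopes": {READ_EVENTS}}

can_read = is_authorized(third_party_token["scopes"], READ_EVENTS)
can_write = is_authorized(third_party_token["scopes"], WRITE_CONFIG)
```

The third party never sees the dealer's own credentials, and its access is limited to what the dealer approved, which is the difference from handing out secondary credentials.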

Reference:

– Best practices for enterprise organizations

– Authentication overview

– Using OAuth 2.0 to Access Google APIs

– Setting up OAuth 2.0

QUESTION 23

For this question, refer to the TerramEarth case study.

TerramEarth plans to connect all 20 million vehicles in the field to the cloud.

This increases the volume to 20 million 600 byte records a second for 40 TB an hour.

How should you design the data ingestion ?

- A. Vehicles write data directly to Google Cloud Storage.

- B. Vehicles write data directly to Google Cloud Pub/Sub.

- C. Vehicles stream data directly to Google BigQuery.

- D. Vehicles continue to write data using the existing system (FTP).

Correct Answer: C
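The volume quoted in the question can be checked with quick arithmetic. The sketch takes the stem's figures at face value (20 million records per second, 600 bytes each). Whether the result is close to 40 depends on whether decimal terabytes or binary tebibytes are meant.

```python
RECORDS_PER_SECOND = 20_000_000   # from the question stem
RECORD_BYTES = 600                # from the question stem
SECONDS_PER_HOUR = 3600

bytes_per_second = RECORDS_PER_SECOND * RECORD_BYTES   # 12,000,000,000 bytes (12 GB/s)
bytes_per_hour = bytes_per_second * SECONDS_PER_HOUR

tb_per_hour = bytes_per_hour / 10**12    # decimal terabytes
tib_per_hour = bytes_per_hour / 2**40    # binary tebibytes

print(f"{tb_per_hour:.1f} TB/h, {tib_per_hour:.1f} TiB/h")  # 43.2 TB/h, 39.3 TiB/h
```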

QUESTION 24

For this question, refer to the TerramEarth case study.

You analyzed TerramEarth’s business requirement to reduce downtime, and found that they can achieve the majority of the time savings by reducing customers’ wait time for parts.

You decided to focus on reducing the 3-week aggregate reporting time.

Which modifications to the company’s processes should you recommend ?

- A. Migrate from CSV to binary format, migrate from FTP to SFTP transport, and develop machine learning analysis of metrics.

- B. Migrate from FTP to streaming transport, migrate from CSV to binary format, and develop machine learning analysis of metrics.

- C. Increase fleet cellular connectivity to 80%, migrate from FTP to streaming transport, and develop machine learning analysis of metrics.

- D. Migrate from FTP to SFTP transport, develop machine learning analysis of metrics, and increase dealer local inventory by a fixed factor.

Correct Answer: C

The Avro binary format is the preferred format for loading compressed data. Avro data is faster to load because the data can be read in parallel, even when the data blocks are compressed.

Google Cloud Storage supports streaming transfers with the gsutil tool or boto library, based on HTTP chunked transfer encoding. Streaming data lets you stream data to and from your Google Cloud Storage account as soon as it becomes available without requiring that the data be first saved to a separate file. Streaming transfers are useful if you have a process that generates data and you do not want to buffer it locally before uploading it, or if you want to send the result from a computational pipeline directly into Google Cloud Storage.

Reference:

– Streaming transfers

– Introduction to loading data

QUESTION 25

For this question, refer to the TerramEarth case study.

Which of TerramEarth’s legacy enterprise processes will experience significant change as a result of increased Google Cloud Platform adoption ?

- A. Opex/capex allocation, LAN changes, capacity planning.

- B. Capacity planning, TCO calculations, opex/capex allocation.

- C. Capacity planning, utilization measurement, data center expansion.

- D. Data Center expansion, TCO calculations, utilization measurement.

Correct Answer: B

QUESTION 26

For this question, refer to the TerramEarth case study.

To speed up data retrieval, more vehicles will be upgraded to cellular connections and be able to transmit data to the ETL process.

The current FTP process is error-prone and restarts the data transfer from the start of the file when connections fail, which happens often.

You want to improve the reliability of the solution and minimize data transfer time on the cellular connections.

What should you do ?

- A. Use one Google Container Engine cluster of FTP servers. Save the data to a Multi-Regional bucket. Run the ETL process using data in the bucket.

- B. Use multiple Google Container Engine clusters running FTP servers located in different regions. Save the data to Multi-Regional buckets in US, EU, and Asia. Run the ETL process using the data in the bucket.

- C. Directly transfer the files to different Google Cloud Multi-Regional Storage bucket locations in US, EU, and Asia using Google APIs over HTTP(S). Run the ETL process using the data in the bucket.

- D. Directly transfer the files to a different Google Cloud Regional Storage bucket location in US, EU, and Asia using Google APIs over HTTP(S). Run the ETL process to retrieve the data from each Regional bucket.

Correct Answer: D

QUESTION 27

For this question, refer to the TerramEarth case study.

TerramEarth’s 20 million vehicles are scattered around the world.

Based on the vehicle’s location, its telemetry data is stored in a Google Cloud Storage (GCS) regional bucket (US, Europe, or Asia).

The CTO has asked you to run a report on the raw telemetry data to determine why vehicles are breaking down after 100 K miles. You want to run this job on all the data.

What is the most cost-effective way to run this job ?

- A. Move all the data into 1 zone, then launch a Google Cloud Dataproc cluster to run the job.

- B. Move all the data into 1 region, then launch a Google Cloud Dataproc cluster to run the job.

- C. Launch a cluster in each region to preprocess and compress the raw data, then move the data into a multi-region bucket and use a Dataproc cluster to finish the job.

- D. Launch a cluster in each region to preprocess and compress the raw data, then move the data into a regional bucket and use a Google Cloud Dataproc cluster to finish the job.

Correct Answer: D

QUESTION 28

For this question, refer to the TerramEarth case study.

TerramEarth has equipped all connected trucks with servers and sensors to collect telemetry data.

Next year they want to use the data to train machine learning models. They want to store this data in the cloud while reducing costs.

What should they do ?

- A. Have the vehicle’s computer compress the data in hourly snapshots, and store it in a Google Cloud Storage (GCS) Nearline bucket.

- B. Push the telemetry data in real-time to a streaming dataflow job that compresses the data, and store it in Google BigQuery.

- C. Push the telemetry data in real-time to a streaming dataflow job that compresses the data, and store it in Google Cloud Bigtable.

- D. Have the vehicle’s computer compress the data in hourly snapshots, and store it in a GCS Coldline bucket.

Correct Answer: D

Coldline Storage is the best choice for data that you plan to access at most once a year, due to its slightly lower availability, 90-day minimum storage duration, costs for data access, and higher per-operation costs.

For example:

Google Cold Data Storage – Infrequently accessed data, such as data stored for legal or regulatory reasons, can be stored at low cost as Coldline Storage, and be available when you need it.

Disaster recovery – In the event of a disaster recovery event, recovery time is key. Google Cloud Storage provides low latency access to data stored as Coldline Storage.

Reference:

– Storage classes

QUESTION 29

For this question, refer to the TerramEarth case study.

Your agricultural division is experimenting with fully autonomous vehicles.

You want your architecture to promote strong security during vehicle operation.

Which two architectures should you consider ? (Choose two.)

- A. Treat every micro service call between modules on the vehicle as untrusted.

- B. Require IPv6 for connectivity to ensure a secure address space.

- C. Use a trusted platform module (TPM) and verify firmware and binaries on boot.

- D. Use a functional programming language to isolate code execution cycles.

- E. Use multiple connectivity subsystems for redundancy.

- F. Enclose the vehicle’s drive electronics in a Faraday cage to isolate chips.

Correct Answer: A, C

QUESTION 30

For this question, refer to the TerramEarth case study.

Operational parameters such as oil pressure are adjustable on each of TerramEarth’s vehicles to increase their efficiency, depending on their environmental conditions.

Your primary goal is to increase the operating efficiency of all 20 million cellular and unconnected vehicles in the field.

How can you accomplish this goal ?

- A. Have your engineers inspect the data for patterns, and then create an algorithm with rules that make operational adjustments automatically.

- B. Capture all operating data, train machine learning models that identify ideal operations, and run locally to make operational adjustments automatically.

- C. Implement a Google Cloud Dataflow streaming job with a sliding window, and use Google Cloud Messaging (GCM) to make operational adjustments automatically.

- D. Capture all operating data, train machine learning models that identify ideal operations, and host in Google Cloud Machine Learning (ML) Platform to make operational adjustments automatically.

Correct Answer: B

QUESTION 31

For this question, refer to the TerramEarth case study.

To be compliant with European GDPR regulation, TerramEarth is required to delete data generated from its European customers after a period of 36 months when it contains personal data.

In the new architecture, this data will be stored in both Google Cloud Storage and Google BigQuery.

What should you do ?

- A. Create a Google BigQuery table for the European data, and set the table retention period to 36 months. For Google Cloud Storage, use gsutil to enable lifecycle management using a DELETE action with an Age condition of 36 months.

- B. Create a Google BigQuery table for the European data, and set the table retention period to 36 months. For Google Cloud Storage, use gsutil to create a SetStorageClass to NONE action with an Age condition of 36 months.

- C. Create a Google BigQuery time-partitioned table for the European data, and set the partition expiration period to 36 months. For Cloud Storage, use gsutil to enable lifecycle management using a DELETE action with an Age condition of 36 months.

- D. Create a Google BigQuery time-partitioned table for the European data, and set the partition period to 36 months. For Google Cloud Storage, use gsutil to create a SetStorageClass to NONE action with an Age condition of 36 months.

Correct Answer: C
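
As a rough illustration of the Cloud Storage half of option C, the following Python sketch builds a lifecycle configuration with a Delete action and an Age condition. It assumes that 36 months can be approximated as 1,095 days (the Age condition is expressed in days); the helper name `build_delete_rule` is hypothetical and not part of any Google tool.

```python
import json

# Assumption: 36 months is approximated as 3 * 365 days; lifecycle Age is in days.
RETENTION_DAYS = 3 * 365


def build_delete_rule(age_days: int) -> dict:
    """Return a lifecycle configuration that deletes objects older than age_days."""
    return {
        "rule": [
            {
                "action": {"type": "Delete"},
                "condition": {"age": age_days},
            }
        ]
    }


lifecycle_config = build_delete_rule(RETENTION_DAYS)
print(json.dumps(lifecycle_config, indent=2))
```

The printed JSON could be saved to a file and applied to a bucket with gsutil lifecycle set. The BigQuery half of the answer relies on partition expiration instead, so that old partitions are dropped without deleting the whole table.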

QUESTION 32

For this question, refer to the TerramEarth case study.

TerramEarth has decided to store data files in Google Cloud Storage.

You need to configure a Google Cloud Storage lifecycle rule to store 1 year of data and minimize file storage cost.

Which two actions should you take ?

- A. Create a Google Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Standard”, and Action: “Set to Coldline”, and create a second GCS lifecycle rule with Age: “365”, Storage Class: “Coldline”, and Action: “Delete”.

- B. Create a Google Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Coldline”, and Action: “Set to Nearline”, and create a second GCS lifecycle rule with Age: “91”, Storage Class: “Coldline”, and Action: “Set to Nearline”.

- C. Create a Google Cloud Storage lifecycle rule with Age: “90”, Storage Class: “Standard”, and Action: “Set to Nearline”, and create a second GCS lifecycle rule with Age: “91”, Storage Class: “Nearline”, and Action: “Set to Coldline”.

- D. Create a Google Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Standard”, and Action: “Set to Coldline”, and create a second GCS lifecycle rule with Age: “365”, Storage Class: “Nearline”, and Action: “Delete”.

Correct Answer: A
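
To see why option A works and option D does not, the two rules can be modeled in a few lines of Python. This is a simplified sketch, not how Cloud Storage evaluates lifecycle rules internally: the `Rule` class and `matching_action` function are hypothetical, and a rule is assumed to match when the object is at least the given age and currently has the given storage class.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    age_days: int        # minimum object age, in days, for the rule to match
    storage_class: str   # storage class the object must currently have
    action: str          # action applied when the rule matches


# The two rules from option A.
OPTION_A = [
    Rule(age_days=30, storage_class="STANDARD", action="SetStorageClass:COLDLINE"),
    Rule(age_days=365, storage_class="COLDLINE", action="Delete"),
]

# The two rules from option D: the Delete rule targets Nearline objects.
OPTION_D = [
    Rule(age_days=30, storage_class="STANDARD", action="SetStorageClass:COLDLINE"),
    Rule(age_days=365, storage_class="NEARLINE", action="Delete"),
]


def matching_action(rules: List[Rule], age_days: int, storage_class: str) -> Optional[str]:
    """Return the action of the first rule whose conditions the object satisfies."""
    for rule in rules:
        if age_days >= rule.age_days and storage_class == rule.storage_class:
            return rule.action
    return None
```

Under option A, an object that was moved to Coldline after 30 days matches the Delete rule at 365 days. Under option D, the same Coldline object never matches the second rule, so it would be kept indefinitely.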

QUESTION 33

For this question, refer to the TerramEarth case study.

You need to implement a reliable, scalable GCP solution for the data warehouse for your company, TerramEarth.

Considering the TerramEarth business and technical requirements, what should you do ?

- A. Replace the existing data warehouse with Google BigQuery. Use table partitioning.

- B. Replace the existing data warehouse with a Compute Engine instance with 96 CPUs.

- C. Replace the existing data warehouse with Google BigQuery. Use federated data sources.

- D. Replace the existing data warehouse with a Compute Engine instance with 96 CPUs. Add an additional Compute Engine preemptible instance with 32 CPUs.

Correct Answer: A

QUESTION 34

For this question, refer to the TerramEarth case study.

A new architecture that writes all incoming data to Google BigQuery has been introduced.

You notice that the data is dirty, and want to ensure data quality on an automated daily basis while managing cost.

What should you do ?

- A. Set up a streaming Google Cloud Dataflow job, receiving data by the ingestion process. Clean the data in a Google Cloud Dataflow pipeline.

- B. Create a Google Cloud Function that reads data from Google BigQuery and cleans it. Trigger the Google Cloud Function from a Compute Engine instance.

- C. Create a SQL statement on the data in Google BigQuery, and save it as a view. Run the view daily, and save the result to a new table.

- D. Use Google Cloud Dataprep and configure the Google BigQuery tables as the source. Schedule a daily job to clean the data.

Correct Answer: D

QUESTION 35

For this question, refer to the TerramEarth case study.

Considering the technical requirements, how should you reduce the unplanned vehicle downtime in GCP ?

- A. Use Google BigQuery as the data warehouse. Connect all vehicles to the network and stream data into Google BigQuery using Google Cloud Pub/Sub and Google Cloud Dataflow. Use Google Data Studio for analysis and reporting.

- B. Use Google BigQuery as the data warehouse. Connect all vehicles to the network and upload gzip files to a Multi-Regional Google Cloud Storage bucket using gcloud. Use Google Data Studio for analysis and reporting.

- C. Use Google Cloud Dataproc Hive as the data warehouse. Upload gzip files to a MultiRegional Google Cloud Storage bucket. Upload this data into Google BigQuery using gcloud. Use Google Data Studio for analysis and reporting.

- D. Use Google Cloud Dataproc Hive as the data warehouse. Directly stream data into partitioned Hive tables. Use Pig scripts to analyze data.

Correct Answer: A

QUESTION 36

For this question, refer to the TerramEarth case study.

You are asked to design a new architecture for the ingestion of the data of the 200,000 vehicles that are connected to a cellular network. You want to follow Google-recommended practices.

Considering the technical requirements, which components should you use for the ingestion of the data ?

- A. Google Kubernetes Engine with an SSL Ingress.

- B. Google Cloud IoT Core with public/private key pairs.

- C. Google Compute Engine with project-wide SSH keys.

- D. Google Compute Engine with specific SSH keys.

Correct Answer: B

QUESTION 37

For this question, refer to the Dress4Win case study.

The Dress4Win security team has disabled external SSH access into production virtual machines (VMs) on Google Cloud Platform (GCP).

The operations team needs to remotely manage the VMs, build and push Docker containers, and manage Google Cloud Storage objects.

What can they do ?

- A. Grant the operations engineer access to use Google Cloud Shell.

- B. Configure a VPN connection to GCP to allow SSH access to the cloud VMs.

- C. Develop a new access request process that grants temporary SSH access to cloud VMs when an operations engineer needs to perform a task.

- D. Have the development team build an API service that allows the operations team to execute specific remote procedure calls to accomplish their tasks.

Correct Answer: A

QUESTION 38

For this question, refer to the Dress4Win case study.

At Dress4Win, an operations engineer wants to create a low-cost solution to remotely archive copies of database backup files.

The database files are compressed tar files stored in their current data center.

How should he proceed ?

- A. Create a cron script using gsutil to copy the files to a Coldline Storage bucket.

- B. Create a cron script using gsutil to copy the files to a Regional Storage bucket.

- C. Create a Google Cloud Storage Transfer Service Job to copy the files to a Coldline Storage bucket.

- D. Create a Google Cloud Storage Transfer Service job to copy the files to a Regional Storage bucket.

Correct Answer: A

Follow these rules of thumb when deciding whether to use gsutil or Storage Transfer Service:

– When transferring data from an on-premises location, use gsutil.

– When transferring data from another cloud storage provider, use Storage Transfer Service.

– Otherwise, evaluate both tools with respect to your specific scenario.

Use this guidance as a starting point. The specific details of your transfer scenario will also help you determine which tool is more appropriate.

QUESTION 39

For this question, refer to the Dress4Win case study.

Dress4Win has asked you to recommend machine types they should deploy their application servers to.

How should you proceed ?

- A. Perform a mapping of the on-premises physical hardware cores and RAM to the nearest machine types in the cloud.

- B. Recommend that Dress4Win deploy application servers to machine types that offer the highest RAM to CPU ratio available.

- C. Recommend that Dress4Win deploy into production with the smallest instances available, monitor them over time, and scale the machine type up until the desired performance is reached.

- D. Identify the number of virtual cores and RAM associated with the application server virtual machines, align them to a custom machine type in the cloud, monitor performance, and scale the machine types up until the desired performance is reached.

Correct Answer: C

QUESTION 40

For this question, refer to the Dress4Win case study.

As part of Dress4Win’s plans to migrate to the cloud, they want to be able to set up a managed logging and monitoring system so they can handle spikes in their traffic load.

They want to ensure that:

- The infrastructure can be notified when it needs to scale up and down to handle the ebb and flow of usage throughout the day

- Their administrators are notified automatically when their application reports errors.

- They can filter their aggregated logs down in order to debug one piece of the application across many hosts.

Which Google Stackdriver features should they use ?

- A. Logging, Alerts, Insights, Debug

- B. Monitoring, Trace, Debug, Logging

- C. Monitoring, Logging, Alerts, Error Reporting

- D. Monitoring, Logging, Debug, Error Report

Correct Answer: D

QUESTION 41

For this question, refer to the Dress4Win case study.

Dress4Win would like to become familiar with deploying applications to the cloud by successfully deploying some applications quickly, as is.

They have asked for your recommendation.

What should you advise ?

- A. Identify self-contained applications with external dependencies as a first move to the cloud.

- B. Identify enterprise applications with internal dependencies and recommend these as a first move to the cloud.

- C. Suggest moving their in-house databases to the cloud and continue serving requests to on-premises applications.

- D. Recommend moving their message queuing servers to the cloud and continue handling requests to on-premises applications.

Correct Answer: C

QUESTION 42

For this question, refer to the Dress4Win case study.

Dress4Win has asked you for advice on how to migrate their on-premises MySQL deployment to the cloud.

They want to minimize downtime and performance impact to their on-premises solution during the migration.

Which approach should you recommend ?

- A. Create a dump of the on-premises MySQL master server, and then shut it down, upload it to the cloud environment, and load into a new MySQL cluster.

- B. Set up a MySQL replica server/slave in the cloud environment, and configure it for asynchronous replication from the MySQL master server on-premises until cutover.

- C. Create a new MySQL cluster in the cloud, configure applications to begin writing to both on-premises and cloud MySQL masters, and destroy the original cluster at cutover.

- D. Create a dump of the MySQL replica server into the cloud environment, load it into Google Cloud Datastore, and configure applications to read/write to Google Cloud Datastore at cutover.

Correct Answer: B

QUESTION 43

For this question, refer to the Dress4Win case study.

Dress4Win has configured a new uptime check with Google Stackdriver for several of their legacy services.

The Stackdriver dashboard is not reporting the services as healthy.

What should they do ?

- A. Install the Stackdriver agent on all of the legacy web servers.

- B. In the Cloud Platform Console download the list of the uptime servers’ IP addresses and create an inbound firewall rule.

- C. Configure their load balancer to pass through the User-Agent HTTP header when the value matches GoogleStackdriverMonitoring-UptimeChecks. (Google Cloud Monitoring)

- D. Configure their legacy web servers to allow requests that contain the User-Agent HTTP header when the value matches GoogleStackdriverMonitoring-UptimeChecks. (Google Cloud Monitoring)

Correct Answer: B

QUESTION 44

For this question, refer to the Dress4Win case study.

As part of their new application experience, Dress4Win allows customers to upload images of themselves.

The customer has exclusive control over who may view these images.

Customers should be able to upload images with minimal latency and also be shown their images quickly on the main application page when they log in.

Which configuration should Dress4Win use ?

- A. Store image files in a Google Cloud Storage bucket. Use Google Cloud Datastore to maintain metadata that maps each customer’s ID and their image files.

- B. Store image files in a Google Cloud Storage bucket. Add custom metadata to the uploaded images in Google Cloud Storage that contains the customer’s unique ID.

- C. Use a distributed file system to store customers’ images. As storage needs increase, add more persistent disks and/or nodes. Assign each customer a unique ID, which sets each file’s owner attribute, ensuring privacy of images.

- D. Use a distributed file system to store customers’ images. As storage needs increase, add more persistent disks and/or nodes. Use a Google Cloud SQL database to maintain metadata that maps each customer’s ID to their image files.

Correct Answer: A

QUESTION 45

For this question, refer to the Dress4Win case study.

Dress4Win has end-to-end tests covering 100% of their endpoints.

They want to ensure that the move to the cloud does not introduce any new bugs.

Which additional testing methods should the developers employ to prevent an outage ?

- A. They should enable Google Stackdriver Debugger on the application code to show errors in the code.

- B. They should add additional unit tests and production scale load tests on their cloud staging environment.

- C. They should run the end-to-end tests in the cloud staging environment to determine if the code is working as intended.

- D. They should add canary tests so developers can measure how much of an impact the new release causes to latency.

Correct Answer: B

QUESTION 46

For this question, refer to the Dress4Win case study.

You want to ensure Dress4Win’s sales and tax records remain available for infrequent viewing by auditors for at least 10 years.

Cost optimization is your top priority.

Which cloud services should you choose ?

- A. Google Cloud Storage Coldline to store the data, and gsutil to access the data.

- B. Google Cloud Storage Nearline to store the data, and gsutil to access the data.

- C. Google Bigtable with US or EU as location to store the data, and gcloud to access the data.

- D. Google BigQuery to store the data, and a web server cluster in a managed instance group to access the data.

- E. Google Cloud SQL mirrored across two distinct regions to store the data, and a Redis cluster in a managed instance group to access the data.

Correct Answer: A

Reference:

– Storage classes

QUESTION 47

For this question, refer to the Dress4Win case study.

The current Dress4Win system architecture has high latency to some customers because it is located in one data center.

As part of a future evaluation and optimization for performance in the cloud, Dress4Win wants to distribute its system architecture to multiple locations on Google Cloud Platform.

Which approach should they use ?

- A. Use regional managed instance groups and a global load balancer to increase performance because the regional managed instance group can grow instances in each region separately based on traffic.

- B. Use a global load balancer with a set of virtual machines that forward the requests to a closer group of virtual machines managed by your operations team.

- C. Use regional managed instance groups and a global load balancer to increase reliability by providing automatic failover between zones in different regions.

- D. Use a global load balancer with a set of virtual machines that forward the requests to a closer group of virtual machines as part of separate managed instance groups.

Correct Answer: A

QUESTION 48

For this question, refer to the Dress4Win case study.

Dress4Win is expected to grow to 10 times its size in 1 year with a corresponding growth in data and traffic that mirrors the existing patterns of usage.

The CIO has set the target of migrating production infrastructure to the cloud within the next 6 months.

How will you configure the solution to scale for this growth without making major application changes and still maximize the ROI ?

- A. Migrate the web application layer to Google App Engine, and MySQL to Google Cloud Datastore, and NAS to Google Cloud Storage. Deploy RabbitMQ, and deploy Hadoop servers using Google Cloud Deployment Manager.

- B. Migrate RabbitMQ to Google Cloud Pub/Sub, Hadoop to Google BigQuery, and NAS to Google Compute Engine with Persistent Disk storage. Deploy Tomcat, and deploy Nginx using Google Cloud Deployment Manager.

- C. Implement managed instance groups for Tomcat and Nginx. Migrate MySQL to Google Cloud SQL, RabbitMQ to Google Cloud Pub/Sub, Hadoop to Google Cloud Dataproc, and NAS to Google Compute Engine with Persistent Disk storage.

- D. Implement managed instance groups for Tomcat and Nginx. Migrate MySQL to Google Cloud SQL, RabbitMQ to Google Cloud Pub/Sub, Hadoop to Google Cloud Dataproc, and NAS to Google Cloud Storage.

Correct Answer: D

QUESTION 49

For this question, refer to the Dress4Win case study.

Considering the given business requirements, how would you automate the deployment of web and transactional data layers ?

- A. Deploy Nginx and Tomcat using Google Cloud Deployment Manager to Google Compute Engine. Deploy a Cloud SQL server to replace MySQL. Deploy Jenkins using Google Cloud Deployment Manager.

- B. Deploy Nginx and Tomcat using Google Cloud Launcher. Deploy a MySQL server using Google Cloud Launcher. Deploy Jenkins to Google Compute Engine using Google Cloud Deployment Manager scripts.

- C. Migrate Nginx and Tomcat to Google App Engine. Deploy a Google Cloud Datastore server to replace the MySQL server in a high-availability configuration. Deploy Jenkins to Google Compute Engine using Google Cloud Launcher.

- D. Migrate Nginx and Tomcat to Google App Engine. Deploy a MySQL server using Google Cloud Launcher. Deploy Jenkins to Google Compute Engine using Google Cloud Launcher.

Correct Answer: A

QUESTION 50

For this question, refer to the Dress4Win case study.

Which of the compute services should be migrated as-is and would still be an optimized architecture for performance in the cloud ?

- A. Web applications deployed using Google App Engine standard environment.

- B. RabbitMQ deployed using an unmanaged instance group.

- C. Hadoop/Spark deployed using Google Cloud Dataproc Regional in High Availability mode.

- D. Jenkins, monitoring, bastion hosts, security scanners services deployed on custom machine types.

Correct Answer: A

QUESTION 51

For this question, refer to the Dress4Win case study.

To be legally compliant during an audit, Dress4Win must be able to give insight into all administrative actions that modify the configuration or metadata of resources on Google Cloud.

What should you do ?

- A. Use Stackdriver Trace to create a trace list analysis.

- B. Use Stackdriver Monitoring to create a dashboard on the project’s activity.

- C. Enable Cloud Identity-Aware Proxy in all projects, and add the group of Administrators as a member.

- D. Use the Activity page in the Google Cloud Platform Console and Stackdriver Logging to provide the required insight.

Correct Answer: D

QUESTION 52

For this question, refer to the Dress4Win case study.

You are responsible for the security of data stored in Google Cloud Storage for your company, Dress4Win.

You have already created a set of Google Groups and assigned the appropriate users to those groups. You should use Google best practices and implement the simplest design to meet the requirements.

Considering Dress4Win’s business and technical requirements, what should you do ?

- A. Assign custom IAM roles to the Google Groups you created in order to enforce security requirements. Encrypt data with a customer-supplied encryption key when storing files in Google Cloud Storage.

- B. Assign custom IAM roles to the Google Groups you created in order to enforce security requirements. Enable default storage encryption before storing files in Google Cloud Storage.

- C. Assign predefined IAM roles to the Google Groups you created in order to enforce security requirements. Utilize Google’s default encryption at rest when storing files in Google Cloud Storage.

- D. Assign predefined IAM roles to the Google Groups you created in order to enforce security requirements. Ensure that the default Google Cloud KMS key is set before storing files in Google Cloud Storage.

Correct Answer: C

QUESTION 53

For this question, refer to the Dress4Win case study.

You want to ensure that your on-premises architecture meets business requirements before you migrate your solution.

What change in the on-premises architecture should you make ?

- A. Replace RabbitMQ with Google Cloud Pub/Sub.

- B. Downgrade MySQL to v5.7, which is supported by Google Cloud SQL for MySQL.

- C. Resize compute resources to match predefined Google Compute Engine machine types.

- D. Containerize the micro-services and host them in Google Kubernetes Engine.

Correct Answer: C

QUESTION 54

Your company’s test suite is a custom C++ application that runs tests throughout each day on Linux virtual machines.

The full test suite takes several hours to complete, running on a limited number of on-premises servers reserved for testing. Your company wants to move the testing infrastructure to the cloud, to reduce the amount of time it takes to fully test a change to the system, while changing the tests as little as possible.

Which cloud infrastructure should you recommend ?

- A. Google Compute Engine unmanaged instance groups and Network Load Balancer.

- B. Google Compute Engine managed instance groups with auto-scaling.

- C. Google Cloud Dataproc to run Apache Hadoop jobs to process each test.

- D. Google App Engine with Google Stackdriver for logging.

Correct Answer: B

Google Compute Engine enables users to launch virtual machines (VMs) on demand. VMs can be launched from the standard images or custom images created by users.

Managed instance groups offer autoscaling capabilities that allow you to automatically add or remove instances from a managed instance group based on increases or decreases in load. Autoscaling helps your applications gracefully handle increases in traffic and reduces cost when the need for resources is lower.

Incorrect Answers:

A: There is no mention of incoming IP data traffic for the custom C++ applications.

C: Apache Hadoop is not fit for testing C++ applications. Apache Hadoop is an open-source software framework used for distributed storage and processing of datasets of big data using the MapReduce programming model.

D: Google App Engine is intended to be used for web applications.

Google App Engine (often referred to as GAE or simply App Engine) is a web framework and cloud computing platform for developing and hosting web applications in Google-managed data centers.

Reference:

– Autoscaling groups of instances
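
The scaling behavior described above can be illustrated with a simplified target-utilization model. This is only a sketch under stated assumptions, not the actual Compute Engine autoscaler algorithm: the function name, its parameters, and the rule "scale so that average utilization approaches the target" are illustrative.

```python
import math


def recommended_size(current_instances: int, avg_utilization: float,
                     target_utilization: float, min_size: int, max_size: int) -> int:
    """Simplified model: pick the group size that brings average utilization near the target."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    desired = math.ceil(current_instances * avg_utilization / target_utilization)
    # Clamp the result to the configured bounds of the managed instance group.
    return max(min_size, min(max_size, desired))


# Four test VMs running at 90% CPU against a 50% target, bounded between 1 and 20 instances.
print(recommended_size(4, 0.9, 0.5, 1, 20))
```

In the test-suite scenario, a burst of queued tests pushes utilization up and the group grows; once the suite finishes, utilization drops and the group shrinks back toward its minimum size.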

QUESTION 55

A recent audit revealed that a new network was created in your GCP project. In this network, a Google Compute Engine (GCE) instance has an SSH port open to the world.

You want to discover this network’s origin.

What should you do ?

- A. Search for Create VM entry in the Stackdriver alerting console.

- B. Navigate to the Activity page in the Home section. Set category to Data Access and search for Create VM entry.

- C. In the Logging section of the console, specify GCE Network as the logging section. Search for the Create Insert entry.

- D. Connect to the GCE instance using project SSH keys. Identify previous logins in system logs, and match these with the project owners list.

Correct Answer: C

Incorrect Answers:

A: To use the Stackdriver alerting console we must first set up alerting policies.

B: Data access logs only contain read-only operations.

Audit logs help you determine who did what, where, and when.

Google Cloud Audit Logging returns two types of logs:

– Admin activity logs

– Data access logs: contain log entries for read-only operations that do not modify any data, such as get, list, and aggregated list methods.
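
As a sketch of how the search in option C might be expressed as a log filter, the Python helper below assembles a filter string for Admin Activity audit entries. The project ID is a placeholder, the helper name is hypothetical, and the method-name fragment `networks.insert` is an assumption that should be checked against the entries that actually appear in your project's logs.

```python
def admin_activity_filter(project_id: str, resource_type: str, method_fragment: str) -> str:
    """Build a Logging filter that selects Admin Activity audit entries for one resource type."""
    log_name = f"projects/{project_id}/logs/cloudaudit.googleapis.com%2Factivity"
    return (
        f'logName="{log_name}" AND '
        f'resource.type="{resource_type}" AND '
        # The ":" operator performs a substring match on the method name.
        f'protoPayload.methodName:"{method_fragment}"'
    )


# Hypothetical project ID; "gce_network" is the resource type for VPC networks.
print(admin_activity_filter("my-project", "gce_network", "networks.insert"))
```

The matching entry's authentication information would then show which principal created the network.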

QUESTION 56

Your company runs several databases on a single MySQL instance.

They need to take backups of a specific database at regular intervals. The backup activity needs to complete as quickly as possible and cannot be allowed to impact disk performance.

How should you configure the storage ?

- A. Configure a cron job to use the gcloud tool to take regular backups using persistent disk snapshots.

- B. Mount a Local SSD volume as the backup location. After the backup is complete, use gsutil to move the backup to Google Cloud Storage.

- C. Use gcsfuse to mount a Google Cloud Storage bucket as a volume directly on the instance and write backups to the mounted location using mysqldump.

- D. Mount additional persistent disk volumes onto each virtual machine (VM) instance in a RAID10 array and use LVM to create snapshots to send to Google Cloud Storage.

Correct Answer: B

QUESTION 57

You want to enable your running Google Kubernetes Engine cluster to scale as demand for your application changes.

What should you do ?

- A. Add additional nodes to your Kubernetes Engine cluster using the following command:

gcloud container clusters resize CLUSTER_NAME --size 10

- B. Add a tag to the instances in the cluster with the following command:

gcloud compute instances add-tags INSTANCE --tags enable-autoscaling max-nodes-10

- C. Update the existing Kubernetes Engine cluster with the following command:

gcloud alpha container clusters update mycluster --enable-autoscaling --min-nodes=1 --max-nodes=10

- D. Create a new Kubernetes Engine cluster with the following command, and then redeploy your application:

gcloud alpha container clusters create mycluster --enable-autoscaling --min-nodes=1 --max-nodes=10

Correct Answer: C

QUESTION 58

The operations manager asks you for a list of recommended practices that she should consider when migrating a J2EE application to the cloud.

Which three practices should you recommend ? (Choose 3 answers.)

- A. Port the application code to run on Google App Engine.

- B. Integrate Google Cloud Dataflow into the application to capture real-time metrics.

- C. Instrument the application with a monitoring tool like Stackdriver Debugger.

- D. Select an automation framework to reliably provision the cloud infrastructure.

- E. Deploy a continuous integration tool with automated testing in a staging environment.

- F. Migrate from MySQL to a managed NoSQL database like Google Cloud Datastore or Google Cloud Bigtable.

Correct Answer: A, D, E

References:

– Deploying a Java App

– Getting Started: Cloud SQL

QUESTION 59

Your company wants to track whether someone is present in a meeting room reserved for a scheduled meeting.

There are 1,000 meeting rooms across 5 offices on 3 continents. Each room is equipped with a motion sensor that reports its status every second.

The data from the motion detector includes only a sensor ID and several different discrete items of information. Analysts will use this data, together with information about account owners and office locations.

Which database type should you use ?

- A. Flat file

- B. NoSQL

- C. Relational

- D. Blobstore

Correct Answer: B

Relational databases were not designed to cope with the scale and agility challenges that face modern applications, nor were they built to take advantage of the commodity storage and processing power available today.

NoSQL fits well when developers are working with applications that create massive volumes of new, rapidly changing data types: structured, semi-structured, unstructured, and polymorphic data.

Incorrect Answers:

D: The Blobstore API allows your application to serve data objects, called blobs, that are much larger than the size allowed for objects in the Datastore service.

Blobs are useful for serving large files, such as video or image files, and for allowing users to upload large data files.

Reference:

– NoSQL Databases Explained

QUESTION 60

Your customer is receiving reports that their recently updated Google App Engine application is taking approximately 30 seconds to load for some of their users.

This behavior was not reported before the update.

What strategy should you take ?

- A. Work with your ISP to diagnose the problem.

- B. Open a support ticket to ask for network capture and flow data to diagnose the problem, then roll back your application.

- C. Roll back to an earlier known good release initially, then use Stackdriver Trace and Logging to diagnose the problem in a development/test/staging environment.

- D. Roll back to an earlier known good release, then push the release again at a quieter period to investigate. Then use Stackdriver Trace and Logging to diagnose the problem.

Correct Answer: C

Stackdriver Logging allows you to store, search, analyze, monitor, and alert on log data and events from Google Cloud Platform and Amazon Web Services (AWS).

Our API also allows ingestion of any custom log data from any source. Stackdriver Logging is a fully managed service that performs at scale and can ingest application and system log data from thousands of VMs. Even better, you can analyze all that log data in real time.

Reference:

– Google Cloud Logging

QUESTION 61

A production database virtual machine on Google Compute Engine has an ext4-formatted persistent disk for data files.

The database is about to run out of storage space.

How can you remediate the problem with the least amount of downtime ?

- A. In the Google Cloud Platform Console, increase the size of the persistent disk and use the resize2fs command in Linux.

- B. Shut down the virtual machine, use the Google Cloud Platform Console to increase the persistent disk size, then restart the virtual machine.

- C. In the Google Cloud Platform Console, increase the size of the persistent disk and verify the new space is ready to use with the fdisk command in Linux.

- D. In the Google Cloud Platform Console, create a new persistent disk attached to the virtual machine, format and mount it, and configure the database service to move the files to the new disk.

- E. In the Google Cloud Platform Console, create a snapshot of the persistent disk, restore the snapshot to a new larger disk, unmount the old disk, mount the new disk and restart the database service.

Correct Answer: A

On Linux instances, connect to your instance and manually resize your partitions and file systems to use the additional disk space that you added.

Extend the file system on the disk or the partition to use the added space. If you grew a partition on your disk, specify the partition. If your disk does not have a partition table, specify only the disk ID.

sudo resize2fs /dev/[DISK_ID][PARTITION_NUMBER]

where [DISK_ID] is the device name and [PARTITION_NUMBER] is the partition number for the device where you are resizing the file system.

Reference:

– Adding or resizing zonal persistent disks
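
The two steps in answer A can be written down as an ordered command plan. The Python sketch below only builds the command strings and executes nothing; the disk name, zone, size, and device path are placeholders, and it assumes the data disk has no partition table, so the file system sits directly on the device.

```python
import shlex
from typing import List


def resize_plan(disk_name: str, zone: str, new_size_gb: int, device: str) -> List[str]:
    """Return the shell commands for an online resize, in order; nothing is executed."""
    # Step 1: grow the persistent disk itself (can be done while the VM is running).
    grow_disk = [
        "gcloud", "compute", "disks", "resize", disk_name,
        f"--size={new_size_gb}GB", f"--zone={zone}",
    ]
    # Step 2: on the VM, extend the ext4 file system into the new space.
    grow_fs = ["sudo", "resize2fs", device]
    return [shlex.join(grow_disk), shlex.join(grow_fs)]


# Placeholder values for illustration only.
for command in resize_plan("db-data", "us-central1-a", 500, "/dev/sdb"):
    print(command)
```

Because neither step requires stopping the VM or unmounting the disk, this path involves less downtime than the snapshot-and-restore or shutdown options.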

QUESTION 62

Your company is forecasting a sharp increase in the number and size of Apache Spark and Hadoop jobs being run on your local datacenter.

You want to utilize the cloud to help you scale this upcoming demand with the least amount of operations work and code change.

Which product should you use ?

- A. Google Cloud Dataflow

- B. Google Cloud Dataproc

- C. Google Compute Engine

- D. Google Kubernetes Engine

Correct Answer: B

Google Cloud Dataproc is a fast, easy-to-use, low-cost and fully managed service that lets you run the Apache Spark and Apache Hadoop ecosystem on Google Cloud Platform. Google Cloud Dataproc provisions big or small clusters rapidly, supports many popular job types, and is integrated with other Google Cloud Platform services, such as Google Cloud Storage and Stackdriver Logging, thus helping you reduce TCO.

Reference:

– Dataproc FAQ

QUESTION 63

You want to optimize the performance of an accurate, real-time, weather-charting application.

The data comes from 50,000 sensors sending 10 readings a second, in the format of a timestamp and sensor reading.

Where should you store the data ?

- A. Google BigQuery

- B. Google Cloud SQL

- C. Google Cloud Bigtable

- D. Google Cloud Storage

Correct Answer: C

Google Cloud Bigtable is a scalable, fully-managed NoSQL wide-column database that is suitable for both real-time access and analytics workloads.

Good for:

– Low-latency read/write access

– High-throughput analytics

– Native time series support

Common workloads:

– IoT, finance, adtech

– Personalization, recommendations

– Monitoring

– Geospatial datasets

– Graphs
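It can help to work out the sustained write rate the question implies. The sketch below does that arithmetic and shows one possible row-key layout for time-series readings. The key format (sensor ID followed by a zero-padded millisecond timestamp) and the function name row_key are illustrative assumptions, not something the question or the reference prescribes.

```python
SENSORS = 50_000
READINGS_PER_SECOND = 10

# Sustained ingest rate implied by the question.
writes_per_second = SENSORS * READINGS_PER_SECOND
writes_per_day = writes_per_second * 60 * 60 * 24


def row_key(sensor_id: str, timestamp_ms: int) -> str:
    """Hypothetical row key: sensor ID first, then a zero-padded timestamp."""
    return f"{sensor_id}#{timestamp_ms:013d}"


print(writes_per_second)  # 500000
print(writes_per_day)     # 43200000000
print(row_key("sensor-00042", 1700000000000))
```

Putting the sensor ID before the timestamp is one common way to keep a single sensor's readings adjacent while spreading writes across sensors; other layouts may suit different query patterns.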

Reference:

– Google Cloud storage products

QUESTION 64

A small number of API requests to your microservices-based application take a very long time.

You know that each request to the API can traverse many services. You want to know which service takes the longest in those cases.

What should you do ?

- A. Set timeouts on your application so that you can fail requests faster.

- B. Send custom metrics for each of your requests to Stackdriver Monitoring.

- C. Use Stackdriver Monitoring to look for insights that show when your API latencies are high.

- D. Instrument your application with Stackdriver Trace in order to break down the request latencies at each microservice.

Correct Answer: D

Reference:

– Quickstart: Find a trace
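To see why per-request tracing answers the question, consider the toy model below. It does not use the Stackdriver Trace API. The Span type, the service names, and the durations are all made up for illustration. It only shows the kind of breakdown a trace gives you: time spent in each service for one slow request.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Span(NamedTuple):
    """Hypothetical, simplified span: time spent inside one service."""
    trace_id: str
    service: str
    duration_ms: float  # assumed to exclude time spent in downstream calls


def slowest_service(spans: List[Span], trace_id: str) -> str:
    """Return the service with the largest total duration in one trace."""
    totals: Dict[str, float] = defaultdict(float)
    for span in spans:
        if span.trace_id == trace_id:
            totals[span.service] += span.duration_ms
    if not totals:
        raise ValueError(f"no spans recorded for trace {trace_id!r}")
    return max(totals, key=lambda service: totals[service])


# Made-up spans for one slow request that crosses four services.
spans = [
    Span("t1", "gateway", 12.0),
    Span("t1", "auth", 8.0),
    Span("t1", "inventory", 950.0),
    Span("t1", "billing", 40.0),
]
print(slowest_service(spans, "t1"))  # inventory
```

Aggregate latency metrics would show that the request was slow, but not which hop was responsible; the per-span breakdown is what isolates it.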

QUESTION 65

Auditors visit your teams every 12 months and ask to review all the Cloud Identity and Access Management (Google Cloud IAM) policy changes in the previous 12 months.

You want to streamline and expedite the analysis and audit process.

What should you do ?

- A. Create custom Google Stackdriver alerts and send them to the auditor.

- B. Enable Logging export to Google BigQuery and use ACLs and views to scope the data shared with the auditor.

- C. Use Cloud Functions to transfer log entries to Google Cloud SQL and use ACLs and views to limit an auditor’s view.

- D. Enable Google Cloud Storage (GCS) log export to audit logs into a GCS bucket and delegate access to the bucket.

Correct Answer: D

QUESTION 66

A lead engineer wrote a custom tool that deploys virtual machines in the legacy data center.

He wants to migrate the custom tool to the new cloud environment. You want to advocate for the adoption of Google Cloud Deployment Manager.

What are two business risks of migrating to Google Cloud Deployment Manager ? (Choose 2 answers.)

- A. Google Cloud Deployment Manager uses Python.

- B. Google Cloud Deployment Manager APIs could be deprecated in the future.

- C. Google Cloud Deployment Manager is unfamiliar to the company’s engineers.

- D. Google Cloud Deployment Manager requires a Google APIs service account to run.

- E. Google Cloud Deployment Manager can be used to permanently delete cloud resources.

- F. Google Cloud Deployment Manager only supports automation of Google Cloud resources.

Correct Answer: B, F

QUESTION 67

Your organization has a 3-tier web application deployed in the same network on Google Cloud Platform.

Each tier (web, API, and database) scales independently of the others. Network traffic should flow through the web to the API tier and then on to the database tier. Traffic should not flow between the web and the database tier.

How should you configure the network ?

- A. Add each tier to a different subnetwork.

- B. Set up software-based firewalls on individual VMs.

- C. Add tags to each tier and set up routes to allow the desired traffic flow.

- D. Add tags to each tier and set up firewall rules to allow the desired traffic flow.

Correct Answer: D

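Google Cloud Platform (GCP) controls traffic between instances with firewall rules that can target instances by network tag. Rules and tags are defined once on the network and apply across all regions, so tagging each tier and allowing only web-to-API and API-to-database traffic enforces the required flow.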
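The tag-based approach can be pictured with a small model. The sketch below is not the Compute Engine API. The AllowRule type and the tag names web, api, and db are assumptions chosen to mirror the three tiers in the question. It models ingress as denied unless an allow rule matches the source and target tags.

```python
from typing import List, NamedTuple, Set


class AllowRule(NamedTuple):
    """Hypothetical allow rule keyed on source and target network tags."""
    source_tag: str
    target_tag: str


# Only the two flows the question permits are allowed.
RULES: List[AllowRule] = [
    AllowRule(source_tag="web", target_tag="api"),
    AllowRule(source_tag="api", target_tag="db"),
]


def is_allowed(source_tags: Set[str], target_tags: Set[str],
               rules: List[AllowRule] = RULES) -> bool:
    """Ingress is denied unless some allow rule matches both tag sets."""
    return any(
        rule.source_tag in source_tags and rule.target_tag in target_tags
        for rule in rules
    )


print(is_allowed({"web"}, {"api"}))  # True
print(is_allowed({"api"}, {"db"}))   # True
print(is_allowed({"web"}, {"db"}))   # False
```

Because the rules reference tags rather than addresses, instances added when a tier scales pick up the right policy as soon as they carry the tier's tag.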

Reference:

– Google Cloud Platform for OpenStack Users

– AWS News Blog: Building three-tier architectures with security groups

QUESTION 68

Your development team has installed a new Linux kernel module on the batch servers in Google Compute Engine (GCE) virtual machines (VMs) to speed up the nightly batch process.

Two days after the installation, 50% of the batch servers failed the nightly batch run.

You want to collect details on the failure to pass back to the development team.

Which three actions should you take ? (Choose 3 answers.)

- A. Use Stackdriver Logging to search for the module log entries.

- B. Read the debug GCE Activity log using the API or Google Cloud Console.

- C. Use gcloud or Cloud Console to connect to the serial console and observe the logs.

- D. Identify whether a live migration event of the failed server occurred, using the activity log.

- E. Adjust the Google Stackdriver timeline to match the failure time, and observe the batch server metrics.

- F. Export a debug VM into an image, and run the image on a local server where kernel log messages will be displayed on the native screen.

Correct Answer: A, C, E

QUESTION 69

Your company wants to try out the cloud with low risk.

They want to archive approximately 100 TB of their log data to the cloud and test the analytics features available to them there, while also retaining that data as a long-term disaster recovery backup.

Which two steps should you take ? (Choose 2 answers.)

- A. Load logs into Google BigQuery.

- B. Load logs into Google Cloud SQL.

- C. Import logs into Google Stackdriver.

- D. Insert logs into Google Cloud Bigtable.

- E. Upload log files into Google Cloud Storage.

Correct Answer: A, E

QUESTION 70

You deploy your custom Java application to Google App Engine. It fails to deploy and gives you the following stack trace.

java.lang.SecurityException: SHA1 digest error for com/Altostrat/CloakedServlet.class

at com.google.appengine.runtime.Request.process-d36f818a24b8cf1d (Request.java)

at sun.security.util.ManifestEntryVerifier.verify (ManifestEntryVerifier.java:210)

at java.util.jar.JarVerifier.processEntry (JarVerifier.java:218)

at java.util.jar.JarVerifier.update (JarVerifier.java:205)

at java.util.jar.JarVerifier$VerifierStream.read (JarVerifier.java:428)

at sun.misc.Resource.getBytes (Resource.java:124)

at java.net.URLClassLoader.defineClass (URLClassLoader.java:273)

at sun.reflect.GeneratedMethodAccessor5.invoke (Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke (DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke (Method.java:616)

at java.lang.ClassLoader.loadClass (ClassLoader.java:266)

What should you do ?

- A. Upload missing JAR files and redeploy your application.

- B. Digitally sign all of your JAR files and redeploy your application.

- C. Recompile the CloakedServlet class using an MD5 hash instead of SHA1.

Correct Answer: B

QUESTION 71

Your customer support tool logs all email and chat conversations to Google Cloud Bigtable for retention and analysis.

What is the recommended approach for sanitizing this data of personally identifiable information or payment card information before initial storage ?

- A. Hash all data using SHA256.

- B. Encrypt all data using elliptic curve cryptography.

- C. De-identify the data with the Google Cloud Data Loss Prevention API.

- D. Use regular expressions to find and redact phone numbers, email addresses, and credit card numbers.

Correct Answer: C

Reference:

– PCI Data Security Standard compliance: Using Cloud Data Loss Prevention to sanitize data

QUESTION 72

You are using Cloud Shell and need to install a custom utility for use in a few weeks.

Where can you store the file so it is in the default execution path and persists across sessions ?

- A. ~/bin

- B. Google Cloud Storage

- C. /google/scripts

- D. /usr/local/bin

Correct Answer: A

QUESTION 73

You want to create a private connection between your instances on Compute Engine and your on-premises data center.

You require a connection of at least 20 Gbps. You want to follow Google-recommended practices.

How should you set up the connection ?

- A. Create a VPC and connect it to your on-premises data center using Dedicated Interconnect.

- B. Create a VPC and connect it to your on-premises data center using a single Google Cloud VPN.

- C. Create a Google Cloud Content Delivery Network (Google Cloud CDN) and connect it to your on-premises data center using Dedicated Interconnect.

- D. Create a Google Cloud Content Delivery Network (Google Cloud CDN) and connect it to your on-premises datacenter using a single Google Cloud VPN.

Correct Answer: A

QUESTION 74

You are analyzing and defining business processes to support your startup’s trial usage of GCP, and you don’t yet know what consumer demand for your product will be.

Your manager requires you to minimize GCP service costs and adhere to Google best practices.

What should you do ?

- A. Utilize free tier and sustained use discounts. Provision a staff position for service cost management.

- B. Utilize free tier and sustained use discounts. Provide training to the team about service cost management.

- C. Utilize free tier and committed use discounts. Provision a staff position for service cost management.

- D. Utilize free tier and committed use discounts. Provide training to the team about service cost management.

Correct Answer: B

QUESTION 75

You are building a continuous deployment pipeline for a project stored in a Git source repository and want to ensure that code changes can be verified before deploying to production.

What should you do ?

- A. Use Spinnaker to deploy builds to production using the red/black deployment strategy so that changes can easily be rolled back.

- B. Use Spinnaker to deploy builds to production and run tests on production deployments.

- C. Use Jenkins to build the staging branches and the master branch. Build and deploy changes to production for 10% of users before doing a complete rollout.

- D. Use Jenkins to monitor tags in the repository. Deploy staging tags to a staging environment for testing. After testing, tag the repository for production and deploy that to the production environment.

Correct Answer: C

Reference:

– Github: Lab: Build a Continuous Deployment Pipeline with Jenkins and Kubernetes

QUESTION 76

Your company is migrating its on-premises data center into the cloud.

As part of the migration, you want to integrate Google Kubernetes Engine (GKE) for workload orchestration. Parts of your architecture must also be PCI DSS-compliant.

Which of the following is most accurate ?

- A. App Engine is the only compute platform on GCP that is certified for PCI DSS hosting.

- B. GKE cannot be used under PCI DSS because it is considered shared hosting.

- C. GKE and GCP provide the tools you need to build a PCI DSS-compliant environment.

- D. All Google Cloud services are usable because Google Cloud Platform is certified PCI-compliant.

Correct Answer: C

QUESTION 77

Google Cloud Platform resources are managed hierarchically using organization, folders, and projects.

When Cloud Identity and Access Management (IAM) policies exist at these different levels, what is the effective policy at a particular node of the hierarchy ?

- A. The effective policy is determined only by the policy set at the node.